- Cloud Security Newsletter

- Posts

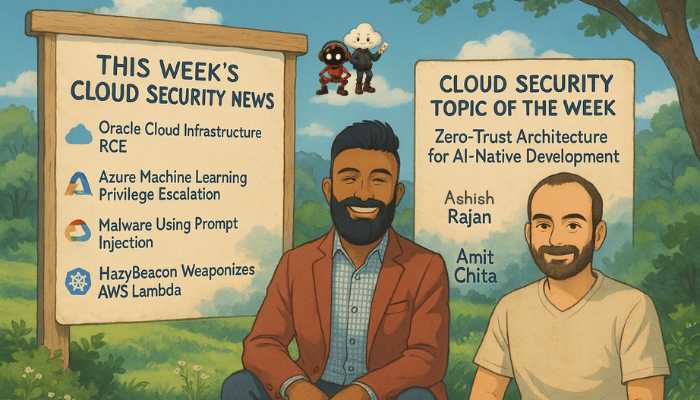

- 🚨 AI Dev Environments Under Siege: RCE in Oracle Cloud, Escalation in Azure ML, and Skynet Malware

🚨 AI Dev Environments Under Siege: RCE in Oracle Cloud, Escalation in Azure ML, and Skynet Malware

The era of AI-native security threats is here. This week’s cloud security incidents expose how development workflows powered by AI are breaking traditional assumptions and why security programs need to evolve rapidly. From Skynet’s prompt injection malware to privilege escalations “by design,” we unpack the real risks and the blueprint to navigate them. Featured insights from Amit Chita of Mend.io reveal how organizations must adapt their security programs for AI-native software development lifecycles, including new licensing challenges, prompt injection threats, and the evolution from reactive security to AI-powered remediation at enterprise scale.

Hello from the Cloud-verse!

This week’s Cloud Security Newsletter Topic we cover - Zero-Trust Architecture for AI-Native Software Development Lifecycles (continue reading)

Incase, this is your 1st Cloud Security Newsletter! You are in good company!

You are reading this issue along with your friends and colleagues from companies like Netflix, Citi, JP Morgan, Linkedin, Reddit, Github, Gitlab, CapitalOne, Robinhood, HSBC, British Airways, Airbnb, Block, Booking Inc & more who subscribe to this newsletter, who like you want to learn what’s new with Cloud Security each week from their industry peers like many others who listen to Cloud Security Podcast & AI Security Podcast every week.

Welcome to this week's edition of the Cloud Security Newsletter!

The convergence of AI-powered development and cloud infrastructure security has reached a critical inflection point. This week's security incidents from Oracle's Cloud Shell RCE vulnerability to the first documented malware using prompt injection against AI security tools—underscore how traditional AppSec approaches require fundamental adaptation for the AI-native era.

In this edition, we feature insights from Amit Chita, Field CTO at Mend.io, who brings deep expertise from building container security solutions at Atom Security (acquired by Mend) and implementing AI-native security platforms. Chita's perspective on securing AI-powered applications provides essential guidance for senior security professionals navigating this rapidly evolving landscape.

📰 TL;DR for Busy Readers

This Week's Bottom Line: AI-powered development environments are under active attack, with critical vulnerabilities in Oracle Cloud Shell and Azure ML exposing the inadequacy of traditional AppSec approaches. The first malware using prompt injection against AI security tools signals a new era of AI-specific threats.

Key Takeaways from Featured Guest:

Immediate Action Required: Verify Oracle Cloud Shell updates, review Azure ML storage controls, implement AWS Lambda URL monitoring

Strategic Shift Needed: Adopt zero-trust for all AI models and agents in your organization - assume they will be compromised

New Licensing Risk: AI introduces dual licensing complexity (model + data) that traditional open-source governance doesn't address

Speed Advantage: AI agents can fix security issues faster than humans, enabling stricter security policies without development friction

Attack Evolution: Prompt injection represents a new attack class requiring AI-specific red teaming and behavioral monitoring

Expert Insight: "Don't trust the AI models that you develop in the organization. Take a zero trust perspective on it. Assume that any AI-based software is gonna be breached." - Amit Chita, Mend.io

📰 THIS WEEK'S SECURITY HEADLINES

🔴 Oracle Cloud Infrastructure "OCI, Oh My" - RCE in Development Tools

What Happened: Tenable Research disclosed a Remote Code Execution vulnerability in Oracle Cloud Infrastructure's Code Editor service, enabling attackers to hijack Cloud Shell environments through a single-click attack. The vulnerability exploited CSRF on the Cloud Shell's router domain to deploy malicious payloads across OCI services including Resource Manager and Data Science platforms.

📌Why This Matters: This incident exposes critical attack vectors specific to cloud-integrated development environments. As Chita emphasizes, "Code is shipped much faster. You have an AI agent writing a full 10,000 lines of code in a few minutes. Attackers are faster. You need to defend faster." The vulnerability demonstrates how cloud service integrations create unexpected security dependencies that require specialized security considerations beyond traditional infrastructure protection.

Enterprise Impact: For organizations using Oracle's cloud development tools, this represents a fundamental shift in attack surface. The 1-click nature makes it particularly dangerous for social engineering campaigns targeting cloud administrators and developers who increasingly rely on browser-based development environments.

📚 Source: Tenable Research Advisory

⚠️ Azure Machine Learning Privilege Escalation - "By Design" Security Gap

What Happened: Orca Security researchers revealed a privilege escalation vulnerability in Azure Machine Learning that allows attackers with basic Storage Account access to execute arbitrary code and potentially compromise entire Azure subscriptions. Microsoft acknowledged the findings but stated this behavior is "by design."

📌Why This Matters: This vulnerability highlights a critical challenge in AI/ML workloads that Chita addresses directly: "What if I gave to my AI access to sensitive information? Can I really trust that it won't tell it to my customers? The answer is probably not." Default configurations in AI services often prioritize development speed over security, leaving enterprises exposed without explicit hardening measures.

Enterprise Impact: Under default SSO configurations, compute instances inherit creator-level access permissions. Researchers demonstrated escalation to "Owner" permissions on Azure subscriptions, enabling access to Azure Key Vaults and role assignments. This represents the exact scenario Chita warns against when discussing AI model trust boundaries.

📚 Source: Orca Security

🚨 First Malware Using Prompt Injection Against AI Security Tools

What Happened: Cybersecurity researchers discovered "Skynet" malware the first documented attempt to weaponize prompt injection attacks against AI-powered security analysis tools. The malware contains embedded prompt injection designed to manipulate AI models processing code samples during analysis.

📌Why This Matters: This marks the emergence of AI-specific attack vectors that Chita predicted: "Your model can read a website on the internet during operation and decide that it wants to hack your organization because someone convinced it." Organizations integrating AI into security workflows must implement additional validation layers and assume AI systems can be manipulated by sophisticated adversaries.

Strategic Implications: As organizations increasingly rely on AI-assisted threat analysis and automated security tools, this attack demonstrates why zero-trust approaches to AI models are essential. Traditional security tools now require protection against adversarial manipulation of their AI components.

📚 Sources: Check Point Research, Microsoft Azure Blog

🎯 "HazyBeacon" - State-Backed APT Weaponizes AWS Lambda for C2

What Happened: Unit 42 researchers revealed a sophisticated state-backed campaign targeting Southeast Asian government entities using AWS Lambda URLs for command and control communications. The technique makes malicious traffic appear as legitimate AWS API calls, evading traditional network detection.

📌Why This Matters: This represents the first documented use of AWS Lambda URLs for malicious C2 purposes, demonstrating how attackers exploit the trust and ubiquity of legitimate cloud services. The campaign employs a comprehensive "living off trusted services" approach using Google Drive and Dropbox for data exfiltration.

Detection Challenges: The technique creates "nearly impossible" network-based detection without deep behavioral analysis, requiring security teams to rethink monitoring strategies for legitimate cloud service usage patterns.

📚 Source: Unit 42, Palo Alto Networks

🎭 Scattered Spider Exploits SaaS Helpdesk Systems

What Happened: The Scattered Spider threat group used voice phishing and SaaS helpdesk impersonation to breach Qantas systems, exposing data on over 6 million users. The campaign exploited cloud SaaS integrations like CRMs and customer support systems with elevated access to customer data.

📌Why This Matters: This demonstrates how cloud SaaS integrations have become prime targets for sophisticated social engineering campaigns. The attack surface extends beyond traditional IT infrastructure to include business-critical cloud applications with privileged access to customer and internal systems.

📚Source: The Hacker News

🎯 Topic of the Week: Zero-Trust Architecture for AI-Native Software Development Lifecycles

The week's vulnerabilities and emerging threats underscore a fundamental shift: traditional AppSec approaches require comprehensive adaptation for AI-powered development environments. This goes beyond adding AI security tools to existing programs it demands rethinking trust boundaries, threat models, and remediation strategies for an era where AI agents generate code, review pull requests, and make security decisions.

Featured Experts This Week 🎤

Amit Chita - Field CTO, Mend.io

Ashish Rajan - CISO | Host, Cloud Security Podcast

Definitions and Core Concepts 📚

Before diving into our insights, let's clarify some key terms:

AI-Native Applications: Software programs where AI becomes the primary value delivery mechanism for customers, built around AI rather than having AI added as a secondary feature.

AI-Powered Applications: Any software program that utilizes AI (large language models, neural networks, or machine learning algorithms) behind the scenes to enhance functionality.

Model License vs. Data License: Two distinct licensing categories in AI development - model licenses governing the AI model itself, and data licenses covering the training data used to create the model.

Prompt Injection: A technique where attackers manipulate AI model inputs to override original instructions, potentially causing misclassification or unauthorized actions.

AI SBOM (Software Bill of Materials): An inventory document detailing AI components, models, and dependencies used in applications, essential for understanding AI-related security exposure.

Slopsquatting: A technique where attackers create malicious packages with names similar to legitimate ones, predicting common AI-generated naming mistakes to increase installation likelihood.

This week's issue is sponsored by Vanta.

Vanta’s Trust Maturity Report benchmarks security programs across 11,000+ companies using anonymized platform data. Grounded in the NIST Cybersecurity Framework, it maps organizations into four maturity tiers: Partial, Risk-Informed, Repeatable, and Adaptive.

The report highlights key trends:.

Only 43% of Partial-tier orgs conduct risk assessments (vs. 100% at higher tiers)

92% of Repeatable orgs monitor threats continuously

71% of Adaptive orgs leverage AI in their security stack

💡Our Insights from this Practitioner 🔍

1- Rethinking Security Programs for AI-Native Development

Chita's core message to security professionals is unambiguous: "Don't trust the AI models that you develop in the organization. Take a zero trust perspective on it. Assume that any AI-based software is gonna be breached, and then try to understand how do you find it the fastest and how do you minimize the impact when it happens?"

This philosophy extends beyond traditional threat modeling. In AI-native environments, the fundamental assumption that code written by humans can be trusted through review processes no longer holds. As Chita explains, "You have an AI agent writing a full 10,000 lines of code in a few minutes" - a scale and speed that renders traditional review processes inadequate.

2- The Licensing Complexity Challenge

One of the most underappreciated challenges in AI-powered development is licensing complexity. Chita reveals a critical distinction: "Now you have two kinds of licenses. You have the model license, and then you have the data license." This creates unprecedented complexity for enterprise security and legal teams.

The challenge extends beyond traditional open-source licensing. Meta's Llama model, for example, includes unusual restrictions: "If you have 500 million users, then you can't use the model." More concerning, many AI licenses include backward-compatibility clauses where "the license owner can decide tomorrow to change the license and it is backward compatible in certain licenses so they can decide that you can't use the model anymore."

For large enterprises managing thousands of repositories, this creates an observability crisis. As Chita notes, "When we come to a large enterprise, they have no idea what is going on. Let's imagine that you have 10,000 repos in organization... Whether they use DeepSeek whether they use Llama, probably they use both. Somewhere you don't know, where, you don't know who to talk to, even on which projects."

3- The Speed vs. Security Paradigm Shift

Traditional AppSec has always balanced security rigor against development velocity. AI fundamentally alters this equation. Chita observes: "Because a lot of the development is shifting to AI, fixing an issue sometimes can be closed within one AI task when it's a simple issue and then it's only a matter of computation or time. So people are more willing to do that."

This shift enables security teams to be more stringent with policies. "AI doesn't care if you give it 20 security issues. It might, but currently we know how to get it to do more work than a person will agree to do," Chita explains. The future state he envisions is compelling: security professionals will know exactly the cost to fix vulnerabilities because "it's gonna take my agent around 10 minutes" rather than negotiating with human developers.

4- New Attack Vectors Require New Defense Strategies

The emergence of AI-specific threats demands expanded threat modeling. Chita describes scenarios that sound like science fiction but represent real current risks: "Your model can read a website on the internet during operation and decide that it wanna hack your organization because someone convinced it."

The attack surface extends beyond direct manipulation. "Let's say I have an AI agent that takes cases from Salesforce, reads information from our documentation from Slack conversations and answers to the customer. What happens if the case of the customer is a prompt injection? Then the agent I have gets a prompt injection and then someone gains control over it."

This creates what Chita calls "indirect prompt injections" - attacks that can originate from any point where text is processed by an LLM. The business impact potential is severe, extending beyond traditional data breaches to manipulation of business logic and financial transactions.

5- Testing AI Systems: Beyond Traditional DAST

Traditional dynamic application security testing (DAST) approaches prove inadequate for AI systems. Chita frames AI red teaming as "the DAST for the AI world where you wanna just like DAST, you get a URL of a website and you try to attack it from the outside... In AI we get access to a model or AI agent and just try to exploit it from outside, like in a black box way."

However, AI testing requires fundamentally different approaches. "How do you test an AI model that can process any text? How do you test all the scenarios. You can't have 100% coverage," Chita explains. The solution involves using AI to generate attack variations: "AI can take one attack and turn it into 1000 variations, and then you can see, oh, 5% of them are working. So you can assess how secure" the system is.

6- Build vs. Buy in the AI Security Era

For security leaders evaluating AI security solutions, Chita provides clear guidance on the build-versus-buy decision. "In general, in the security space, when you build for yourself, you take a lot of risk that you are missing stuff. And also there's a lot of things that are not cost effective to build."

Specific areas where buying makes sense include licensing analysis: "No one wants to build their own database of what licenses are good. No one wants to pay for lawyers tons of money to understand all the licenses in the market. You want someone to do that for you... for any new license that comes up, for any new models that comes up."

However, certain capabilities make sense to develop internally: "You can configure your tools within the organization to have whatever security prompt or tools or limit their tooling or choose what models they use and configurations."

Threat Intelligence: Microsoft Azure AI Content Safety Documentation - Prompt Shields for defending against AI manipulation

Whitepaper: NIST AI Risk Management Framework - Comprehensive guidance for AI system security

Tools: OWASP Top 10 for LLM Applications - Essential security considerations for LLM implementations

Cloud-Native Guidance: CNCF AI Security Guidelines - Best practices for AI in cloud-native environments

Licensing Resource: AI Model License Tracker - Comprehensive database of AI model licensing terms

Question for you? (Reply to this email)

Have you seen Zero Trust in non-AI situations and believe that can be applied for AI Applications?

Next week, we'll explore another critical aspect of cloud security. Stay tuned!

We would love to hear from you📢 for a feature or topic request or if you would like to sponsor an edition of Cloud Security Newsletter.

Thank you for continuing to subscribe and Welcome to the new members in tis newsletter community💙

Peace!

Was this forwarded to you? You can Sign up here, to join our growing readership.

Want to sponsor the next newsletter edition! Lets make it happen

Have you joined our FREE Monthly Cloud Security Bootcamp yet?

checkout our sister podcast AI Security Podcast