🚨 DOJ Clears Google's Wiz Purchase: How Bloomberg Navigates AI-Powered Security at Scale

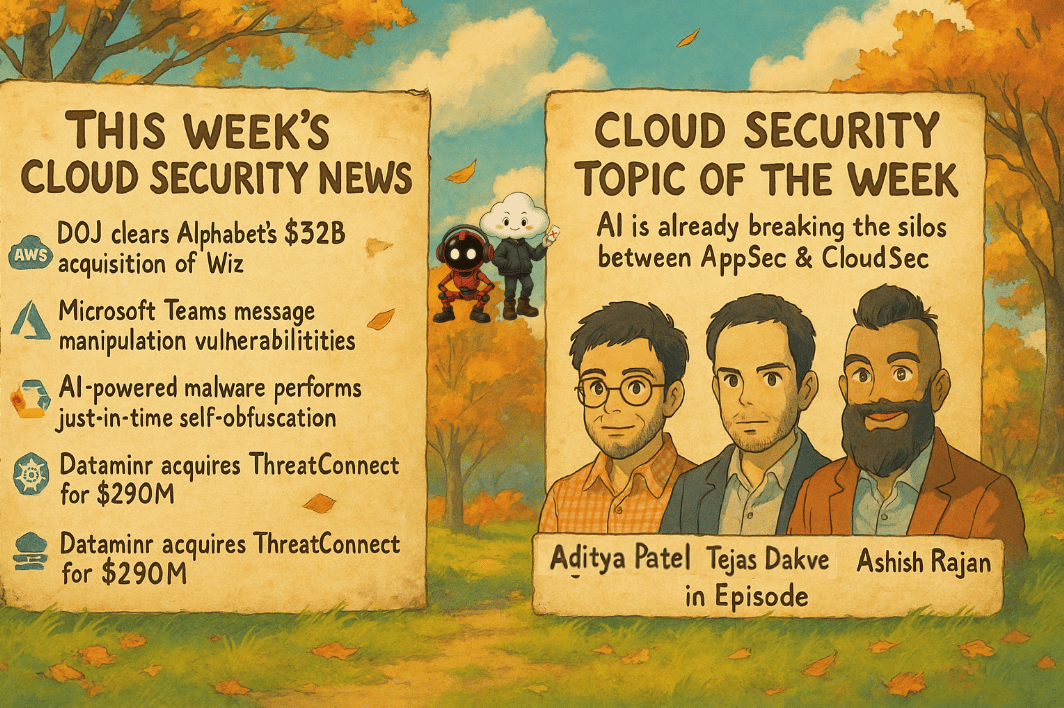

This week we explore newly disclosed Microsoft Teams vulnerabilities that enable message manipulation and caller ID forgery, AI-powered self-modifying malware discovered by Google, and exclusive insights from Bloomberg's application security leader and a cloud security architect on breaking down silos between AppSec and CloudSec teams as AI transforms the enterprise security landscape.

Hello from the Cloud-verse!

This week's Cloud Security Newsletter topic: Breaking Down Silos: How AI Security Demands AppSec and CloudSec Convergence.

In case this is your first Cloud Security Newsletter, you are in good company!

You are reading this issue along with your friends and colleagues from companies like Netflix, Citi, JP Morgan, LinkedIn, Reddit, GitHub, GitLab, Capital One, Robinhood, HSBC, British Airways, Airbnb, Block, Booking Inc & more who subscribe to this newsletter. Like you, they want to learn what's new in Cloud Security each week from their industry peers, as do the many others who listen to Cloud Security Podcast & AI Security Podcast every week.

Welcome to this week’s Cloud Security Newsletter

As AI reshapes application development and cloud security at an unprecedented pace, traditional security boundaries are dissolving. This week, we examine critical vulnerabilities in Microsoft Teams, a collaboration platform with more than 320 million monthly users, alongside the emergence of AI-powered polymorphic malware that can dynamically evade detection. More importantly, we bring you a candid conversation with Tejas Dakve (Application Security Leader at Bloomberg Industry Group), Aditya Patel (Security Architect at a major cloud provider), and Ashish Rajan about how enterprises are breaking down the historical silos between application security and cloud security teams to address AI-native threats that refuse to respect traditional organizational boundaries.

The key insight: security teams can no longer operate as gatekeepers. As Tejas puts it, teams must transform "from department of no to department of safe yes" while building guardrails that enable rather than obstruct innovation.

📰 TL;DR for Busy Readers

Wiz x Google: DOJ antitrust review cleared; expect deeper GCP ↔ Wiz coupling.

Teams flaws: Silent edits + spoofed identities → treat chat as an authoritative system of record with controls, and require zero-trust verification for high-stakes comms.

M&A: Dataminr buys ThreatConnect ($290M) → external signals + internal intel.

AI-polymorphic malware: “PROMPTFLUX” queries Gemini for JIT obfuscation → behavior over signatures.

Supply chain / Dev: React Native CLI RCE (CVE-2025-11953) → protect dev laptops & CI.

AppSec + CloudSec convergence: required for AI security; T-shaped engineers with cross-functional knowledge are becoming the new baseline.

Paved road solutions work: provide secure defaults and automated approval workflows instead of manual security gates.

Agentic AI: shifts focus from conversational threats to IAM policies and action-based permissions across cloud environments.

📰 THIS WEEK'S SECURITY HEADLINES

1. DOJ clears Alphabet’s $32B acquisition of Wiz (antitrust review)

What Happened

Following its antitrust review, the U.S. Department of Justice (DOJ) cleared Alphabet/Google's planned $32B purchase of Wiz, removing the last major regulatory hurdle. This is the largest security deal on record and cements Google's push to own more of the enterprise cloud security stack.

Why it matters (practitioner take):

Expect tighter Wiz ↔ Google Cloud integrations (posture/CNAPP/AI-assisted detections).

Re-check vendor concentration and dual-vendor exit options in FY26 plans.

Do this now

Board brief: “Wiz x Google—integration paths and lock-in risk.”

Risk register: Add vendor-exit play + multi-cloud coverage test.

Red team tasker: Simulate GCP-native ↔ Wiz control gaps during M&A integration.

2. Microsoft Teams vulns allow message manipulation & exec impersonation

What happened: Check Point disclosed four Teams issues enabling silent message edits, spoofed identities, and forged call/caller notifications. One is tracked as CVE-2024-38197; fixes rolled out between 2024 and October 2025.

Why it matters: Teams is an authoritative system of record for many workflows (approvals/IR/chatOps). Silent history edits + spoofed senders undermine financial approvals, IR channels, and change control.

Do this now

Policy: Treat chat like code → require out-of-band verification for payment and secrets requests.

Controls: Inline DLP/malware scanning for Teams file and message payloads.

Detection: Alert on retroactive edits in high-risk channels (finance/IR).

Admin: Confirm October 2025 client/service updates are deployed org-wide.
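To make the "alert on retroactive edits" item concrete, here is a minimal Python sketch. It assumes you already export Teams message or audit events somewhere and can normalize them into the hypothetical `MessageEvent` record below (field names, channel names, and the five-minute grace window are all assumptions, not Microsoft APIs), and that your export records an edit timestamp at all. It flags messages in high-risk channels that were edited well after they were posted.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List, Optional

@dataclass
class MessageEvent:
    """Hypothetical normalized record; map your own Teams audit/export fields onto it."""
    message_id: str
    channel: str
    sender: str
    created: datetime
    last_edited: Optional[datetime] = None  # None if the message was never edited

HIGH_RISK_CHANNELS = {"finance-approvals", "incident-response"}  # assumption: your channel names
EDIT_GRACE = timedelta(minutes=5)  # assumption: quick typo fixes right after posting are tolerated

def retroactive_edits(events: Iterable[MessageEvent],
                      high_risk=HIGH_RISK_CHANNELS,
                      grace: timedelta = EDIT_GRACE) -> List[MessageEvent]:
    """Return messages in high-risk channels edited more than `grace` after posting."""
    alerts = []
    for ev in events:
        if ev.channel not in high_risk or ev.last_edited is None:
            continue
        if ev.last_edited - ev.created > grace:
            alerts.append(ev)
    return alerts
```

The grace window is a design choice: it keeps ordinary typo fixes out of the alert queue while still surfacing the hours-later edits that matter for approvals and IR timelines.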

3. AI-powered malware (“PROMPTFLUX”) performs JIT self-obfuscation

What happened: Google Threat Intelligence tracked PROMPTFLUX, an experimental VBScript dropper that calls Gemini to generate new evasion code at runtime. The report also notes other malware families abusing LLMs.

Why it matters: This is the first publicly documented use of LLM-driven JIT obfuscation. Static signatures will lag, so behavioral and API telemetry become table stakes.

Do this now

EDR: Prioritize behavioral rules (script child-process anomalies, LOLBins).

Egress: Monitor/alert on unexpected LLM API calls from endpoints/servers.

Hunt: Search for metamorphic VBS with periodic network beacons to AI APIs.

Background: Google’s prior work on Gemini for large-scale malware analysis
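As a starting point for the egress and hunt items above, the following Python sketch counts LLM API requests from sources that are not on an allowlist and pulls out those made by Windows script hosts. The record keys, the allowlist, and the hostname list are assumptions to map onto your own proxy, DNS, or EDR data; the hostnames shown are just examples of public LLM API endpoints.

```python
from collections import Counter
from typing import Dict, Iterable, Set, Tuple

# Example LLM API hostnames (assumption: extend with the providers relevant to you).
LLM_API_HOSTS = {
    "generativelanguage.googleapis.com",  # Gemini API
    "api.openai.com",
    "api.anthropic.com",
}

# Script hosts that rarely have a legitimate reason to call an LLM API directly.
SCRIPT_HOSTS = {"wscript.exe", "cscript.exe"}

def unexpected_llm_calls(proxy_records: Iterable[Dict[str, str]],
                         allowed_sources: Set[str]) -> Counter:
    """Count LLM API requests per (source host, process) that are not allowlisted.

    Each record is a dict with hypothetical keys 'src_host', 'process' and
    'dest_host'; map these from your proxy, DNS or EDR schema.
    """
    hits: Counter = Counter()
    for rec in proxy_records:
        dest = rec["dest_host"].lower().rstrip(".")
        if dest in LLM_API_HOSTS and rec["src_host"] not in allowed_sources:
            hits[(rec["src_host"], rec["process"].lower())] += 1
    return hits

def script_host_hits(hits: Counter) -> Dict[Tuple[str, str], int]:
    """Subset of hits coming from Windows script hosts (a PROMPTFLUX-style hunt lead)."""
    return {key: n for key, n in hits.items() if key[1] in SCRIPT_HOSTS}
```

Keying on (host, process) rather than host alone is deliberate: a browser on a developer laptop calling an LLM API is often expected, while `wscript.exe` doing the same is a strong lead for a VBScript dropper.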

4. Major M&A: Dataminr Acquires ThreatConnect for $290M

What happened: Dataminr is combining its external signals with ThreatConnect's threat intelligence platform (TIP). SecurityWeek logged 45 cybersecurity M&A deals in October 2025, and Veeam also announced its acquisition of Securiti.

Why it matters: Expect agentic correlation (external+internal) and recommendations in SOC stacks; fewer point tools, more platform workflows.

Do this now

SOC roadmap: Define intel→detection→response data flows before tooling.

Contracting: Tie SLAs to false-positive budgets + MTTD improvements.

Privacy: Re-review data residency for external signal ingestion.

5. Critical React Native CLI Vulnerability Exposes a Package With 2 Million Weekly Downloads

What happened: JFrog disclosed a command injection flaw (CVE-2025-11953) in @react-native-community/cli-server-api. Because the Metro dev server binds to all interfaces by default, the bug is remotely exploitable rather than local-only. Affects 4.8.0 → <20.0.0; fixed in 20.0.0.

Why it matters: Developer laptops and CI agents become cloud pivots; supply chain risk.

Do this now

Patch: Upgrade @react-native-community/cli-server-api to ≥ 20.0.0 in every repo.

Network: Isolate dev subnets; block Metro dev-server ports from WAN and cross-LAN traffic.

CI: Rotate tokens/SSH keys; scan for indicators of compromise on dev hosts.
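To find affected copies across repos, a small Python sketch can scan an npm `package-lock.json` (lockfileVersion 2/3, which lists every installed package under a `packages` map keyed by `node_modules/...` path) for versions in the affected range stated above (4.8.0 up to, but not including, 20.0.0). The version parser is a deliberate simplification that ignores pre-release tags, so treat results as triage, not a full semver implementation.

```python
from typing import Dict, List, Tuple

PKG = "@react-native-community/cli-server-api"
AFFECTED_MIN = (4, 8, 0)  # first affected version, per the advisory summary above
FIXED = (20, 0, 0)        # first fixed version

def parse_version(v: str) -> Tuple[int, int, int]:
    """'19.1.2' -> (19, 1, 2). Pre-release/build suffixes are dropped (simplification)."""
    core = v.split("-")[0].split("+")[0]
    parts = [int(p) for p in core.split(".")]
    return tuple((parts + [0, 0, 0])[:3])

def vulnerable_entries(lockfile: Dict) -> List[Tuple[str, str]]:
    """Return (path, version) for every affected copy, including nested installs."""
    found = []
    for path, meta in lockfile.get("packages", {}).items():
        if path.endswith("node_modules/" + PKG) and "version" in meta:
            if AFFECTED_MIN <= parse_version(meta["version"]) < FIXED:
                found.append((path, meta["version"]))
    return found
```

In a CI job you would load the lockfile with `json.load` and fail the build when the returned list is non-empty; matching on the path suffix also catches copies nested under other dependencies.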

🎯 Cloud Security Topic of the Week:

Breaking Down Silos: How AI Security Demands AppSec and CloudSec Convergence

The rise of AI-native applications is forcing a fundamental reckoning with how security teams are structured and operate. For years, application security and cloud security have existed as distinct disciplines with separate tools, threat models, and areas of responsibility. But as AI becomes deeply embedded into both application logic and cloud infrastructure, this separation is no longer tenable.

The challenge: Agentic AI spans app logic, IAM, data, and multi-cloud APIs, which means two separate threat models (platform and application) that interlock.

Field lessons (Bloomberg + Cloud Architects):

Move from gates → guardrails: pre-approved “paved road” paths with automated checks.

Treat agents like non-human workloads: identity-first design; least-privilege scopes.

Continuous threat modeling: automated prompts + human in the loop; update as threats evolve.

Build T-shaped teams: deep specialty + broad fluency (AppSec understands IAM; CloudSec groks prompt/LLM issues).

Starter blueprint

Org: Cross-functional pods (AppSec, CloudSec, Data, Platform, Compliance, Product).

Identity: Agent roles with action-scoped permissions; rotate secrets from a vault; no static MFA hacks.

Pipelines: Governance-as-code checks + AI eval gates; approvals in hours, not weeks.

Ops: AISPM (AI security posture management) mindset: track datasets, models, agents, embeddings, and endpoints as assets.
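The "governance-as-code checks" line in the blueprint could start as something as small as the Python sketch below, run in a pipeline against each agent's deployment manifest. The manifest shape, the `vault://` reference convention, and the `eval_gate_passed` flag are all hypothetical placeholders for whatever your platform uses; the point is that wildcard scopes, inline secrets, and skipped AI evals fail automatically instead of waiting for a manual review.

```python
from typing import Dict, List

def check_agent_manifest(manifest: Dict) -> List[str]:
    """Return policy violations for a hypothetical agent manifest; empty means pass."""
    problems = []
    # Action-scoped permissions: reject global or service-wide wildcards.
    for action in manifest.get("actions", []):
        if action == "*" or action.endswith(":*"):
            problems.append(f"wildcard action not allowed: {action}")
    # Secrets must be references into a vault, never inline values.
    for name, value in manifest.get("secrets", {}).items():
        if not str(value).startswith("vault://"):
            problems.append(f"secret '{name}' must be a vault reference")
    # AI eval gate: the agent must have passed your evaluation suite.
    if not manifest.get("eval_gate_passed", False):
        problems.append("AI eval gate has not passed")
    return problems
```

Returning a list of reasons rather than a boolean keeps the paved road friendly: developers see exactly what to fix, and a clean manifest can be approved in minutes.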

Featured Experts This Week 🎤

Tejas Dakve - Senior Manager Application Security, Bloomberg Industry Group

Aditya Patel - Security Architect at a major cloud provider

Ashish Rajan - CISO | Co-Host, AI Security Podcast | Host, Cloud Security Podcast

Definitions and Core Concepts 📚

Before diving into our insights, let's clarify some key terms:

Agentic AI: LLMs that act (APIs/emails/resources), not just chat.

T-Shaped Engineer: Deep expertise in one domain, conversant across adjacent domains.

Paved Road: Secure-by-default patterns + auto approvals.

JIT AI Malware: Malware that calls LLMs at runtime for new obfuscation.

CVE-2025-11953: React Native CLI RCE via Metro server exposure.

This week's issue is sponsored by Dropzone

New independent research from Cloud Security Alliance proves AI SOC agents dramatically improve analyst performance.

In controlled testing with 148 security professionals using Dropzone AI, analysts achieved 22-29% higher accuracy, completed investigations 45-61% faster, and maintained superior quality even under fatigue.

The study reveals that 94% of participants viewed AI more positively after hands-on use. See the full benchmark results.

💡 Our Insights from These Practitioners 🔍

AI Is Already Breaking the Silos Between AppSec & CloudSec (Full Episode here)

The Volume Problem: AI-Generated Code Overwhelms Traditional Security Gates

The conversation began with a stark reality check about the scale challenge facing security teams. As Tejas Dakve explains, "The speed of writing the code and the volume of code that security teams have to secure is unparalleled. It's absolutely impossible to use our traditional security gates where security teams used to be a gate checker."

This isn't hyperbole. When developers leverage AI coding assistants like GitHub Copilot or Cursor, they can produce code at several times their previous velocity (3-5x by some estimates). But security teams haven't scaled proportionally. The math simply doesn't work anymore for manual review processes.

Aditya Patel reinforces this point: "The problem is now we have to acknowledge the fact that new attacks are being introduced or new sort of architectural considerations that need to be there for multi-agent or Agentic workflows." Traditional threat modeling frameworks like STRIDE or PASTA don't explicitly address prompt injection, data poisoning, or model theft, threats that require specialized knowledge.

The solution both practitioners advocate? Automated, continuous threat modeling paired with "paved road" solutions that provide secure defaults developers can adopt without friction.

From "Department of No" to "Department of Safe Yes"

Perhaps the most striking cultural insight came from Tejas Dakve's reframing of security's role: "Security leaders we have traditionally been recognized as a department of no. I think we have to change our mindset from department of no to department of safe yes."

What does "safe yes" look like in practice? It means building guardrails instead of gates. When a developer wants to use an open-source LLM from Hugging Face, the old model required a two-week security review. The new model involves an automated request workflow that:

Triggers automated scans for LLM-specific vulnerabilities

Evaluates model resiliency and hallucination risks

Routes to compliance and legal for licensing review

Provides approval within hours, not weeks
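Here is a hedged sketch of the decision step at the end of such a workflow, in Python. It assumes upstream stages already produced scanner findings and a hallucination-rate measurement; the `ModelRequest` fields, the license allowlist, and the 5% threshold are illustrative assumptions, not values from the episode. Clean requests are approved automatically, and anything else is routed to humans with reasons attached.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

APPROVED_LICENSES = {"apache-2.0", "mit"}  # assumption: maintained by legal/compliance
MAX_HALLUCINATION_RATE = 0.05              # assumption: threshold from your own eval suite

@dataclass
class ModelRequest:
    model_id: str   # e.g. a Hugging Face repo id
    license: str
    # (severity, description) pairs from your LLM-specific scanner
    scan_findings: List[Tuple[str, str]] = field(default_factory=list)
    hallucination_rate: float = 1.0  # unmeasured models default to "fails the gate"

def triage(req: ModelRequest) -> Tuple[str, List[str]]:
    """Return ('approved', []) or ('needs_review', reasons) for a model request."""
    reasons = []
    if any(sev in ("critical", "high") for sev, _ in req.scan_findings):
        reasons.append("high-severity scan finding")
    if req.hallucination_rate > MAX_HALLUCINATION_RATE:
        reasons.append("hallucination rate above threshold")
    if req.license.lower() not in APPROVED_LICENSES:
        reasons.append("license needs legal review")
    return ("approved" if not reasons else "needs_review", reasons)
```

Only the exceptions reach a human queue, which is how the approval time can drop from weeks to hours without skipping the checks.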

As Tejas emphasizes, "We have to take security into the AI lifecycle the way we took security and baked it into CI/CD pipelines." This isn't about lowering security standards; it's about automating the evaluation and approval processes so velocity doesn't suffer while maintaining rigor.

The Silo Problem: When AppSec and CloudSec Can't See the Full Picture

One of the most illuminating moments in the discussion revealed how organizational silos create blind spots for AI security. Tejas described a common scenario: "There is a gap between application security and cloud security when it comes to agentic AI, because AppSec cares about source code, logic, things like that. But we don't always have visibility into roles, permissions, policies associated with that agent."

This is where the traditional division breaks down. An AppSec team threat modeling an AI agent might focus on prompt injection and output validation: critical concerns, but incomplete. Meanwhile, the cloud security team owns IAM policies but may not understand what actions the agent is designed to perform or what data it accesses.

Aditya Patel offers a concrete distinction: "For all practical purposes, these are two different threat models. There's a threat model for the underlying platform or the cloud, and then there's a threat model for the application itself."

The solution? Cross-functional pods that bring together AppSec, CloudSec, data security, compliance, and product engineering. As Tejas notes, "These teams need to come together, and only then we can have some sort of AI governance within an organization before Shadow AI starts taking place everywhere."

Agentic AI: The Authentication Challenge Nobody Talks About

When the conversation turned to agentic workflows, Aditya Patel highlighted a technical challenge that many organizations haven't fully grasped. Traditional authentication mechanisms assume a human user. But what happens when an AI agent needs to authenticate across five or ten different endpoints, some internal and some external?

"For authentication, you can authenticate using something you know and something you have," Aditya explains. "For an agent, it needs to pass a secret password or certificate to authenticate itself. Hopefully it's not hard coded. So hopefully there's an API call where it goes to a vault or a secrets manager and fetches the credential."

But it gets more complex: "What about if there is an MFA? Then it needs to have a way of getting the OTPs from somewhere. And one more thing you need to keep in mind is bot detection or CAPTCHAs. It needs to solve CAPTCHAs, because most things like Akamai or CloudFront or Cloudflare have automated bot detection."
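Aditya's "hopefully it's not hard coded" point can be sketched in Python as a small wrapper that fetches an agent's credential from a secrets manager at runtime and caches it briefly, so rotations are picked up after the TTL. The `fetch` callable stands in for whatever client call your vault or secrets manager exposes (hypothetical here), and the 300-second TTL is an assumption to tune.

```python
import time
from typing import Callable, Dict, Tuple

class CachedSecretFetcher:
    """Fetch agent credentials from a secrets manager at runtime, caching briefly.

    `fetch` is a hypothetical callable wrapping your vault client: it takes a
    secret name and returns the current secret value.
    """

    def __init__(self, fetch: Callable[[str], str], ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock
        self._cache: Dict[str, Tuple[str, float]] = {}  # name -> (value, fetched_at)

    def get(self, name: str) -> str:
        now = self._clock()
        cached = self._cache.get(name)
        if cached and now - cached[1] < self._ttl:
            return cached[0]            # still fresh: avoid hammering the vault
        value = self._fetch(name)       # nothing is hard-coded in the agent itself
        self._cache[name] = (value, now)
        return value
```

The short TTL is the design trade-off: long enough to keep vault traffic reasonable across many endpoint calls, short enough that a rotated or revoked credential stops working for the agent soon after.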

This technical reality underscores why Tejas emphasizes IAM policies as the critical security control for agentic AI: "With agentic AI, the IAM role policies, permissions associated with that agent, becomes a very important factor. My focus would be more on what are IAM roles and permissions and policies associated with it. What can go wrong if that agent gets controlled by some malicious actors?"
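One way to start answering Tejas's "what can go wrong" question is a quick blast-radius review of the permissions attached to an agent. The Python sketch below assumes AWS-style `service:ActionName` permission strings and uses a deliberately rough heuristic (a few well-known escalation actions plus verb prefixes such as `Delete` or `Put`); it is an illustration of the review, not a complete privilege-escalation catalog.

```python
from typing import Dict, List

# Assumption: AWS-style "service:ActionName" strings. These sets are a rough,
# illustrative heuristic, not a complete list of escalation paths.
ESCALATION_ACTIONS = {"iam:PassRole", "iam:CreateAccessKey",
                      "iam:AttachRolePolicy", "sts:AssumeRole"}
WRITE_PREFIXES = ("Delete", "Terminate", "Put", "Update", "Create", "Attach", "Send")

def blast_radius(actions: List[str]) -> Dict[str, List[str]]:
    """Group an agent's granted actions by what an attacker who hijacks it could do."""
    report: Dict[str, List[str]] = {"escalation_or_wildcard": [],
                                    "write_or_destroy": [],
                                    "read_or_other": []}
    for action in actions:
        _, _, name = action.partition(":")
        if action == "*" or name == "*" or action in ESCALATION_ACTIONS:
            report["escalation_or_wildcard"].append(action)
        elif name.startswith(WRITE_PREFIXES):
            report["write_or_destroy"].append(action)
        else:
            report["read_or_other"].append(action)
    return report
```

Anything landing in the first two buckets is a candidate for tighter scoping or a human-approval step before the agent can act.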

The Skills Evolution: T-Shaped Engineers and Security Generalists

Both practitioners were candid about how the expectations for security professionals have evolved and how overwhelming it can feel. Tejas laid out the progression: "Before introduction of AI, security engineers were expected to know core AppSec concepts like threat modeling, web penetration testing, mobile related threats. Then came along cloud security 10 years ago. Then in between came DevSecOps. Couple of years back, AI came into the limelight."

The solution isn't to become a "jack of all trades"; both emphasized the importance of depth. Instead, they advocate for "T-shaped engineers": professionals with deep expertise in one domain (the vertical bar of the "T") but broad understanding across multiple areas (the horizontal bar).

Aditya drew an analogy from David Epstein's book "Range," contrasting Tiger Woods (who played only golf from age two) with Roger Federer (who played everything before focusing on tennis as a teenager). "There are two types of environments," Aditya explains. "One is like a very deterministic environment like chess or music, or even golf. But the other one is more like a non-deterministic environment: tennis or science, arts, or cybersecurity. If you have the multidisciplinary background training there, that helps."

The practical advice for security leaders? Create paths for cross-functional learning and reward breadth as well as depth. Tejas adds, "We have to develop T-shaped engineers. We want security engineers who are experts in their own domain, but they should also be able to connect dots between various different sections."

Automated Threat Modeling: The GitHub Copilot Example

To illustrate why periodic threat modeling matters for AI systems, Tejas offered a compelling case study: "GitHub Copilot when it got rolled out, almost every organization did some sort of threat modeling before they introduced it into their development ecosystem. Then a few months back, a researcher released a paper saying that GitHub Copilot can be tricked to introduce backdoor into your product."

New threats like this can make earlier threat models obsolete. The solution? Automated, continuous threat modeling that evolves with the threat landscape. And yes, using AI for this purpose. As Aditya suggests: "Use AI for it, right? You can just ask your chatbot to give you top 10 threats. This is my architecture, these are my business cases, use cases, technical use cases. Give me what threats to expect."

But both practitioners emphasized a critical caveat: human expertise remains essential. Aditya warns, "I don't think we are at a point where we can completely rely on these systems. There has to be a human in the loop from a security point of view." The term he uses for AI-generated outputs is memorable: "These chatbots are dreaming up the internet. They have read the internet, and now they are word by word, they're dreaming up things."
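A minimal Python sketch of that loop: build the prompt Aditya describes from the architecture and use cases, fold in newly published threats when re-running, and keep the result as an unapproved draft until a human signs off. `ask_llm` is a hypothetical wrapper around whichever chatbot you use; no specific LLM API is assumed.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class ThreatModel:
    system: str
    threats: List[str]
    reviewed_by: str = ""  # stays empty until a human reviewer signs off

    @property
    def approved(self) -> bool:
        return bool(self.reviewed_by)

def build_prompt(architecture: str, use_cases: List[str], new_intel: List[str]) -> str:
    lines = ["You are assisting a security threat modeling review.",
             f"Architecture: {architecture}",
             "Business and technical use cases:"]
    lines += [f"- {u}" for u in use_cases]
    if new_intel:  # re-runs include threats published since the last model
        lines.append("Consider these recently published threats:")
        lines += [f"- {i}" for i in new_intel]
    lines.append("List the top 10 threats to expect, one per line.")
    return "\n".join(lines)

def draft_threat_model(system: str, architecture: str, use_cases: List[str],
                       new_intel: List[str], ask_llm: Callable[[str], str]) -> ThreatModel:
    """Produce a draft only; `ask_llm` is a hypothetical chatbot wrapper."""
    reply = ask_llm(build_prompt(architecture, use_cases, new_intel))
    threats = [t.strip("-• ").strip() for t in reply.splitlines() if t.strip()][:10]
    return ThreatModel(system=system, threats=threats)
```

Scheduling this whenever new research lands (like the Copilot backdoor paper) keeps the model current, while the `approved` flag enforces the human in the loop.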

Prioritization at Scale: The Quality Over Quantity Shift

With thousands of vulnerabilities flooding security dashboards, how do teams decide what to fix? Tejas advocates for a pipeline approach: "Accumulate all vulnerabilities onto one single platform, a posture management type solution. Create a pipeline from all vulnerabilities to just top 5% or 1% of vulnerabilities that matter to you the most."

The factors in this pipeline include:

Applicable and reachable vulnerabilities

EPSS scores and CISA KEV advisories

Internet-facing versus internal-only

Proof of concept availability

Actual exploitation paths

The mindset shift is crucial: "Your CTO is not going to care about the fact that you fixed a hundred vulnerabilities. What if half of those vulnerabilities are internal only or have no exploitation path? Fix only five vulnerabilities which are internet facing with highest likelihood of exploitation."
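The pipeline Tejas describes can be sketched in Python as "filter, score, keep the top slice". The factors mirror the list above (reachability, EPSS, CISA KEV, internet exposure, public PoC, exploitation path), but the weights are illustrative assumptions to tune for your environment, not an industry standard.

```python
import math
from dataclasses import dataclass
from typing import List

@dataclass
class Vuln:
    vuln_id: str
    reachable: bool        # applicable and reachable in your code/runtime
    epss: float            # EPSS probability, 0.0-1.0
    in_kev: bool           # listed in CISA Known Exploited Vulnerabilities
    internet_facing: bool
    public_poc: bool
    exploit_path: bool     # attack-path analysis found a route to the asset

def priority(v: Vuln) -> float:
    """Illustrative score; the weights are assumptions, not a standard."""
    score = v.epss
    score += 1.0 if v.in_kev else 0.0
    score += 0.5 if v.internet_facing else 0.0
    score += 0.3 if v.public_poc else 0.0
    score += 0.5 if v.exploit_path else 0.0
    return score

def top_fraction(vulns: List[Vuln], fraction: float = 0.05) -> List[Vuln]:
    """Drop unreachable findings, then keep the top `fraction` by score (at least one)."""
    candidates = sorted((v for v in vulns if v.reachable), key=priority, reverse=True)
    if not candidates:
        return []
    keep = max(1, math.ceil(len(candidates) * fraction))
    return candidates[:keep]
```

Filtering on reachability before scoring is what turns "a hundred fixed vulnerabilities" into the handful the CTO actually cares about.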

Aditya adds a cloud-specific dimension: separating environments by velocity requirements. "You can have more restrictive IAM policies in regulated or business-critical workloads. But you also need environments where the restrictions are not as much, where velocity needs to be higher, so developers don't have to come back to the security team."

Advice for Those Starting Their AI Security Journey

For organizations just beginning their AI security maturity journey, both practitioners offered pragmatic guidance. Aditya drew a memorable analogy: "AI is on a similar curve to the five stages of grief: denial, anger, bargaining, depression, and acceptance. Some companies are on the denial stage still, but most have moved past that stage. Very few have reached the acceptance stage."

The first step? Acceptance that AI is here to stay and requires dedicated attention. From there, mature organizations are:

Building paved road solutions with secure defaults

Investing in specialized tooling for AI-specific threats

Reorganizing teams into cross-functional pods

Creating risk matrices specific to AI threats

Developing remediation libraries for common AI security issues

Establishing AI governance through clear policies

Tejas emphasizes governance as the foundation: "Mature organizations have gone ahead and established AI governance through some sort of AI related policy. That clearly describes what is okay, what is not okay, and how to pursue what is okay."

For individuals looking to transition into AI security, Aditya recommends working backward from job market requirements: "Don't create this ideal plan that, 'Hey, I will learn only AppSec, or I will learn AppSec first and then CloudSec.' It's a security generalist type of role. You need to know a bit about everything."

Tejas adds practical career advice for those struggling to break into security: "Look for other entry level roles: IT help desk, tier one or tier two support, network engineer. You don't have to start in cybersecurity. Those skills like how to network, how to communicate, how to troubleshoot are important in cybersecurity as well."

The Bottom Line

AI security isn't a separate discipline; it's forcing the convergence of application security, cloud security, data security, and compliance into unified, cross-functional teams. The silos that worked when applications and infrastructure were separate are breaking down because AI systems span both layers intrinsically.

Security leaders must shift from gatekeepers to enablers, building automated guardrails that allow innovation at AI speed while maintaining rigorous security standards. The challenge is significant, but as both Tejas and Aditya emphasize, the fundamentals haven't changed: authentication, authorization, encryption, and defense in depth still matter. What's changed is the attack surface, the velocity of development, and the need for security professionals who can bridge multiple domains.

Question for you? (Reply to this email)

🤖 Are your AppSec and CloudSec still siloed or are you already building T-shaped pods with agent-aware IAM and paved roads?

Next week, we'll explore another critical aspect of cloud security. Stay tuned!

📬 Want weekly expert takes on AI & Cloud Security? [Subscribe here]

We would love to hear from you 📢 with a feature or topic request, or if you would like to sponsor an edition of Cloud Security Newsletter.

Thank you for continuing to subscribe, and welcome to the new members of this newsletter community 💙

Peace!

Was this forwarded to you? You can sign up here to join our growing readership.

Want to sponsor the next newsletter edition? Let's make it happen!

Have you joined our FREE Monthly Cloud Security Bootcamp yet?

Check out our sister podcast, AI Security Podcast.