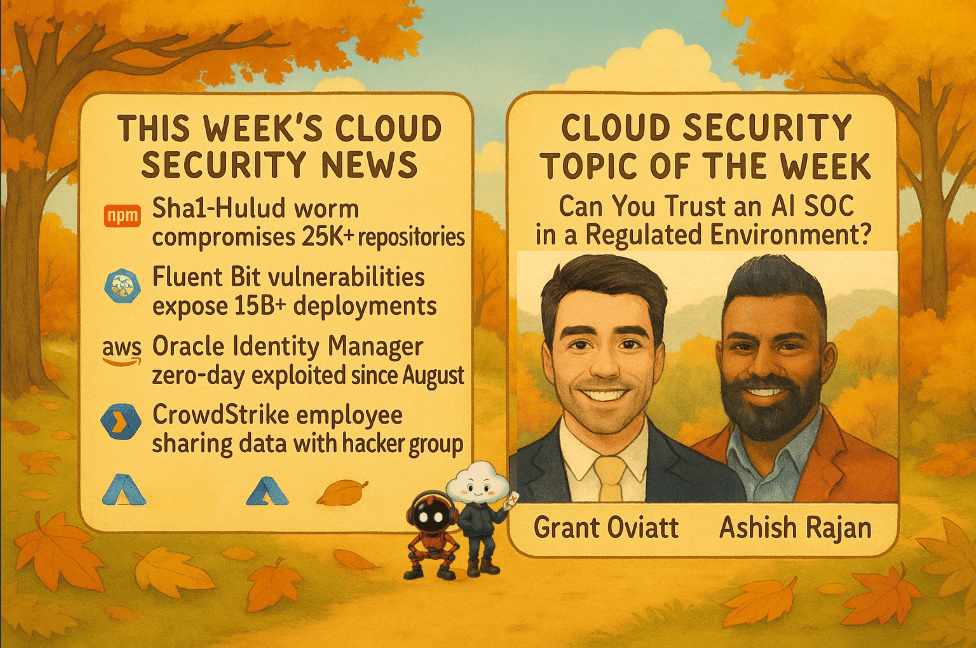

🚨 Sha1-Hulud Worm Exposes 25K+ Repos: Lessons from Building Trustworthy AI SOCs For Regulated Environments

This week: Supply chain attacks compromise enterprise CI/CD pipelines as 600+ npm packages fall to self-replicating malware. Former Mandiant SOC leader Grant Oviatt reveals how Prophet Security reaches 99.3% agreement with human analysts using AI agents in regulated environments, completing triage in 4 minutes versus traditional teams' multi-hour cycles. Learn the architecture requirements for explainability, traceability, and data sovereignty that regulators demand from AI-driven security operations.

Hello from the Cloud-verse!

This week's Cloud Security Newsletter topic: Can You Trust an AI SOC in a Regulated Environment? (continue reading)

In case this is your first Cloud Security Newsletter: you are in good company!

You are reading this issue alongside friends and colleagues from companies like Netflix, Citi, JP Morgan, LinkedIn, Reddit, GitHub, GitLab, Capital One, Robinhood, HSBC, British Airways, Airbnb, Block, Booking Inc & more. Like you, they subscribe to learn what's new in cloud security each week from their industry peers, and many also listen to the Cloud Security Podcast and AI Security Podcast every week.

Welcome to this week’s Cloud Security Newsletter

The convergence of sophisticated supply chain attacks and AI-driven security operations creates both unprecedented risk and opportunity for enterprise defenders. This week, we examine the Sha1-Hulud 2.0 worm that compromised 25,000+ GitHub repositories while exploring how organizations are successfully deploying AI Security Operations Centers (AI SOCs) in highly regulated environments.

This week is all about trust:

Trust in your supply chain: Sha1-Hulud 2.0 tearing through npm and 25K+ GitHub repos.

Trust in your telemetry: Fluent Bit RCE and log tampering across 15B+ deployments.

Trust in your identity tier: Oracle Identity Manager pre-auth RCE exploited since August.

Trust in your SOC: as AI agents move from toy demos to front-line investigations in regulated environments.

Our featured expert this week is Grant Oviatt, Head of Security Operations at Prophet Security and former SOC leader at Mandiant and Red Canary. Grant brings over 15 years of experience in security operations and shares his journey from AI skeptic to running an AI SOC that handled 12K+ investigations in 2 weeks with 99.3% agreement with humans and ~4-minute investigation times.

Together with host Ashish Rajan, Grant explores a question many of you are wrestling with right now: Can you put an AI SOC in front of regulated workloads and sleep at night? [Listen to the episode]

📰 TL;DR for Busy Readers

Sha1-Hulud 2.0 worm compromised 600+ npm packages with persistent GitHub Actions backdoors: rotate all CI/CD credentials immediately

Fluent Bit vulnerabilities (8+ years old) threaten 15B+ cloud deployments: attackers can manipulate logs while executing code

AI SOC maturity (with Grant Oviatt) now enables 4-minute investigations with 99.3% agreement with human analysts in regulated environments through explainability and traceability

Data sovereignty controls allow regulated customers to host AI SOC data planes in their own cloud with single-tenant architecture

Oracle Identity Manager zero-day exploited since August: IAM compromises provide "keys to the kingdom" across hybrid environments

📰 THIS WEEK'S TOP 5 SECURITY HEADLINES

1. Sha1-Hulud 2.0: Self-Replicating npm Worm Compromises 25,000+ Repositories

Between November 21-23, a second wave of the Sha1-Hulud supply chain attack compromised over 600 npm packages, including popular tools from Zapier, PostHog, ENS Domains, and Postman. The malware has infected 25,000+ GitHub repositories across approximately 500 users, with cross-victim credential exfiltration actively occurring. This evolved variant now installs self-hosted GitHub Actions runners on infected systems, providing persistent backdoor access that survives reboots, while harvesting credentials from AWS, GCP, and Azure environments across 17 AWS regions.

Why This Matters to Cloud Security Leaders:

This represents a fundamental escalation in software supply chain attacks, directly threatening enterprise CI/CD pipelines and cloud infrastructure. This worm isn’t “just another” npm typo-squatting event. It directly targets:

CI/CD runners – Execution happens in preinstall, i.e., before dependencies resolve, when your build agents and secrets are fully exposed.

Cloud control planes – It reaches into AWS/GCP/Azure creds across 17 AWS regions and exfiltrates them to attacker-controlled repos.

GitHub Actions fleet – The worm installs self-hosted runners as durable, authenticated control channels into your infra.

If your org consumes affected packages (some are present in ~27% of code/cloud environments in sampled scans), you may have both a supply-chain compromise and a cloud persistence problem.

Immediate Actions:

Start by scanning all endpoints for affected packages, then rotate ALL credentials (npm tokens, GitHub PATs, SSH keys, AWS/GCP/Azure credentials).

CI/CD lockdown

Disable or tightly restrict preinstall/postinstall lifecycle scripts in build environments.

Treat build agents like production workloads: restrictive egress and zero trust toward GitHub, npm, and other external services.

Incident playbook

Cross-check all repos for "Sha1-Hulud: The Second Coming" and suspicious self-hosted runners (e.g., SHA1HULUD).

Rotate everything: npm tokens, GitHub PATs, SSH keys, cloud credentials used on any affected machine.

AI SOC tie-in

This is exactly the sort of large-scale, high-noise event where AI SOC triage can shine: auto-enriching build logs, access logs, and GitHub audit trails to spot cross-tenant token reuse that humans would burn out on.
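As a rough starting point for the CI/CD lockdown and incident playbook items above, the sketch below walks a checkout and lists every `package.json` that declares a `preinstall` or `postinstall` script, then flags repository descriptions and runner names that match the indicators named above. The function names and the input shapes (a plain dict of repo descriptions and a list of runner names that you export yourself) are illustrative assumptions, not part of any vendor tooling.

```python
import json
from pathlib import Path

# Lifecycle hooks that npm runs automatically during install.
LIFECYCLE_HOOKS = ("preinstall", "postinstall")

# Indicators taken from the playbook text above; extend from vendor advisories.
REPO_MARKER = "Sha1-Hulud: The Second Coming"
RUNNER_MARKER = "SHA1HULUD"


def find_lifecycle_scripts(root):
    """Return (path, hook, command) for every package.json under root
    that declares a preinstall or postinstall script."""
    findings = []
    for manifest in sorted(Path(root).rglob("package.json")):
        try:
            data = json.loads(manifest.read_text(encoding="utf-8"))
        except (OSError, ValueError):
            continue  # unreadable or malformed manifest: skip it
        scripts = data.get("scripts") if isinstance(data, dict) else None
        if not isinstance(scripts, dict):
            continue
        for hook in LIFECYCLE_HOOKS:
            if hook in scripts:
                findings.append((str(manifest), hook, scripts[hook]))
    return findings


def flag_indicators(repo_descriptions, runner_names):
    """Hypothetical helper. Inputs are data you already exported:
    a dict of repo name -> description and a list of runner names."""
    bad_repos = [name for name, desc in repo_descriptions.items()
                 if desc and REPO_MARKER.lower() in desc.lower()]
    bad_runners = [r for r in runner_names
                   if RUNNER_MARKER.lower() in r.lower()]
    return bad_repos, bad_runners
```

Many legitimate packages ship install hooks, so the first function produces a review list rather than a verdict; the value is in knowing which hooks your build agents would actually execute.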

Read More: Wiz Research | Mend.io Analysis | Snyk Advisory | The Hacker News

2. Critical Fluent Bit Vulnerabilities Expose 15+ Billion Cloud Deployments

Oligo Security disclosed five critical vulnerabilities in Fluent Bit, the open-source logging agent deployed over 15 billion times across AWS, Google Cloud, Azure, and Kubernetes environments. The most severe flaw, CVE-2025-12972, is a path-traversal vulnerability that has existed for over 8 years, allowing attackers to write or overwrite arbitrary files for remote code execution. Additional vulnerabilities enable authentication bypass, log tampering, tag spoofing, and stack buffer overflow attacks.

Why This Matters to Cloud Security Leaders:

Fluent Bit sits at the heart of cloud observability infrastructure, processing logs, metrics, and traces before they reach SIEM platforms and detection systems. The tool is embedded in Kubernetes clusters, AI labs, banks, and all major cloud providers, making these vulnerabilities a systemic risk to cloud ecosystems.

Fluent Bit sits between your workloads and your SIEM/XDR. If it’s compromised, an attacker can:

Hijack the node (DaemonSet → host-level RCE → cluster pivot).

Silence or rewrite incriminating logs to defeat detection.

Inject fake telemetry to mislead incident responders and AI-driven analytics.

That last bullet is especially relevant if you’re experimenting with LLM-based detection. If your logging substrate can be coerced into lying, AI just makes you confidently wrong, faster.

The attack surface is particularly concerning for enterprise defenders: attackers could execute malicious code through Fluent Bit while dictating which events are recorded, erasing or rewriting incriminating entries to hide their tracks, and injecting plausible fake telemetry to mislead responders. Because Fluent Bit is commonly deployed as a Kubernetes DaemonSet, a single compromised log agent can cascade into full node and cluster takeover. This directly connects to Grant Oviatt's discussion on traceability: if your logging infrastructure can be manipulated, the entire chain of evidence for AI SOC investigations becomes unreliable.

The disclosure process itself revealed systemic issues: Despite multiple responsible disclosure attempts through official channels, it took over a week and the involvement of AWS before the vulnerabilities received sustained attention.

Immediate Actions:

Patch or replace immediately

Upgrade all agents to v4.1.1 or v4.0.12; use AWS Inspector / Security Hub / Systems Manager to locate vulnerable nodes at scale.

Harden the log plane

Lock Fluent Bit configs as read-only where possible.

Use static tags + fixed paths to reduce spoofing.

Limit network access for Fluent Bit outputs to known log backends only.

Monitor for integrity issues

Add controls to detect tag spoofing, missing logs, or anomalous routing changes from Fluent Bit pods.
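The integrity checks above can be approximated downstream of the agents, on records that have already reached your log backend. The sketch below assumes each record is a dict with `source`, `tag`, and `ts` (epoch seconds) keys and that you maintain a static allowlist of tags per source; both the record shape and the function names are assumptions for illustration, not Fluent Bit features.

```python
from collections import defaultdict


def find_tag_spoofing(records, allowed_tags):
    """Return records whose tag is not in the static allowlist for their source."""
    return [r for r in records
            if r["tag"] not in allowed_tags.get(r["source"], set())]


def find_log_gaps(records, max_gap_seconds):
    """Return (source, gap_start, gap_end) wherever consecutive records
    from the same source are further apart than max_gap_seconds."""
    by_source = defaultdict(list)
    for r in records:
        by_source[r["source"]].append(r["ts"])
    gaps = []
    for source in sorted(by_source):
        stamps = sorted(by_source[source])
        for earlier, later in zip(stamps, stamps[1:]):
            if later - earlier > max_gap_seconds:
                gaps.append((source, earlier, later))
    return gaps
```

A gap is only a signal that logs may have been silenced; quiet workloads produce gaps too, so the threshold needs tuning per source.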

Read More: Oligo Security Report | CSO Online | The Hacker News

3. Oracle Identity Manager Zero-Day Exploited in the Wild Since August

CISA added CVE-2025-61757 to its Known Exploited Vulnerabilities catalog on November 21, a critical pre-authentication remote code execution flaw (CVSS 9.8) in Oracle Identity Manager. Searchlight Cyber researchers discovered active exploitation dating back to at least August 2025, possibly as a zero-day before Oracle's October 2025 patch. The vulnerability affects Oracle Identity Manager versions 11.1.2.3 and 12.2.1.3, allowing unauthenticated attackers to compromise systems over the network without user interaction.

Why This Matters to Cloud Security Leaders:

Oracle Identity Manager is a critical component of enterprise IAM infrastructure, deployed across government agencies and large enterprises. The pre-authentication nature of this vulnerability means attackers can compromise systems without any credentials, making it a prime target for initial access. Given the centralized nature of IAM systems, a compromise here could provide attackers with "keys to the kingdom" across cloud and on-premises environments.

The extended exploitation timeline is particularly concerning: evidence suggests the vulnerability may have been exploited as a zero-day before Oracle's October patch, giving attackers months of undetected access. CISA's inclusion in the KEV catalog indicates active targeting of federal systems, with federal agencies required to patch by December 12, 2025.

OIM is core IAM infrastructure. Key points:

Pre-auth RCE on OIM is effectively a full identity tier compromise.

Given the months-long exploitation window, assume adversaries have used it as an initial access + persistence vector.

CISA has mandated US federal agencies patch by 12 Dec 2025, signaling active exploitation at scale.

Immediate Actions: Apply Oracle's October 2025 Critical Patch Update immediately, conduct forensic review of Oracle Identity Manager logs dating back to August 2025, look for suspicious authentication patterns or privilege escalations, and review all identity provisioning activities during the exploitation window.

Key points:

Patch OIM with Oracle’s October 2025 CPU as an emergency change.

Assume breach since Aug 2025:

Review OIM logs for anomalous authentications, provisioning events, and privilege escalations.

Treat OIM as a suspected initial access vector in any ongoing IR investigations.

Read More: BleepingComputer | SecurityWeek | The Hacker News

4. CrowdStrike Terminates Insider Sharing Data with Hacker Group

CrowdStrike terminated an employee on November 21 for sharing internal screenshots with the hacker group "Scattered Lapsus$ Hunters" on Discord. The terminated employee had access to CrowdStrike's Slack and Confluence systems but did not have access to production systems or customer data. CrowdStrike emphasized that no systems were breached and the incident involved an authorized employee sharing limited internal screenshots.

Why This Matters to Cloud Security Leaders:

This incident highlights the persistent insider threat risk facing cybersecurity vendors and cloud service providers. While CrowdStrike successfully detected and responded to the threat, the incident demonstrates how social engineering can target even security-conscious organizations. The hacker group attempted to use the screenshots as proof of a broader compromise, amplifying the incident through social media, a tactic that's increasingly common and can cause reputational damage disproportionate to actual risk.

For enterprise security leaders, this serves as a reminder that insider threats don't always involve malicious intent from the outset. Employees can be manipulated into sharing information that seems innocuous but provides attackers with reconnaissance value. The targeting of CrowdStrike, months after the company's July 2025 global IT outage, suggests adversaries are opportunistically targeting organizations during periods of heightened scrutiny.

Key Takeaways: Implement robust insider threat programs with behavioral analytics, educate employees on social engineering tactics targeting internal communications, restrict access to internal collaboration tools based on need-to-know principles, monitor for unauthorized data exfiltration from collaboration platforms, and have crisis communication plans for insider threat incidents.

Key Points

Expand insider threat monitoring to include collaboration platforms and screenshot-heavy workflows.

Train staff that “just screenshots” can still be sensitive.

Ensure crisis comms plans explicitly cover insider-driven “near miss” incidents.

Read More: TechCrunch | BleepingComputer

5. SEC Drops SolarWinds Case After Years of Legal Battle

The SEC voluntarily dismissed its securities fraud lawsuit against SolarWinds and CISO Timothy Brown on November 20, ending a case filed in October 2023 over alleged misrepresentations about cybersecurity practices before the 2020 supply chain breach. The dismissal came after a federal judge ruled in July 2025 that most of the SEC's claims could not proceed, narrowing the case significantly.

Why This Matters to Cloud Security Leaders:

The SEC's decision to abandon the SolarWinds case represents a significant shift in regulatory enforcement around cybersecurity disclosures. While this may bring relief to many companies and CISOs concerned about the chilling effect on proactive security work, organizations must still proceed carefully when making public statements about their security programs.

In the wake of cyber incidents, federal, state, and international regulators may scrutinize cybersecurity disclosures as evidence of negligence. This includes the SEC's 2023 requirements for companies to disclose material cyber risks and incidents to investors. Effective governance around drafting and vetting cybersecurity statements and disclosures remains critical for public companies.

Strategic Implications: Review public-facing security statements for accuracy and consistency with actual practices, ensure board-level oversight of cybersecurity risk disclosures, maintain detailed documentation of security investments and improvements, and prepare for continued regulatory scrutiny even with shifting enforcement priorities.

Key Points

The dismissal is widely seen as a setback to the SEC’s attempt to pursue personal liability for CISOs around security disclosures.

But it should not be read as a retreat from cyber disclosure expectations; if anything, it will refocus regulators on cases with clearer evidence trails.

For cloud-heavy enterprises, this reinforces the need for defensible, documented risk narratives around supply chain, cloud concentration, and AI usage, not marketing-driven security postures.

Read More: Inside Privacy | The Hacker News | Cybersecurity Dive

🎯 Cloud Security Topic of the Week:

Can You Trust an AI SOC in a Regulated Environment?

This week's theme: if you want an AI-assisted SOC in a regulated org, you have to design for trust first, not bolt it on later.

The 3 trust pillars for regulated AI SOCs

Explainability – Every conclusion must read like a senior analyst’s notes, not an opaque score.

Traceability – Line-by-line evidence of every query, API call, and log used in the investigation.

Sovereignty – A data plane that can live in the customer’s cloud, with single-tenant isolation and BYO-model gateways.

Our takeaway

If you can’t:

Explain why an alert was closed,

Show every step the AI took, and

Prove where the data lived and who controlled it,

…you don’t have a regulated-ready AI SOC yet. You have an experiment. [Listen to the full Episode: Grant Oviatt on building a regulated-ready AI SOC]

Featured Experts This Week 🎤

Grant Oviatt - Head of Security Operations, Prophet Security

Ashish Rajan - CISO | Co-Host of AI Security Podcast, Host of Cloud Security Podcast

Definitions and Core Concepts 📚

Before diving into our insights, let's clarify some key terms:

AI SOC (AI Security Operations Center): A security operations center that leverages artificial intelligence agents to perform triage, investigation, and response activities traditionally handled by human security analysts. Modern AI SOCs use large language models and specialized agents to query security tools, analyze logs, and make investigative decisions with human oversight.

Explainability: In the context of AI SOC operations, explainability refers to the ability to understand and articulate how an AI agent reached a specific decision. This includes visibility into the reasoning process, the data considered, and the logic applied, similar to how a human analyst would explain their investigative conclusions.

Traceability: The ability to track the complete lineage of data and decisions from raw inputs through transformation and analysis to final outputs. In AI SOC environments, this means documenting every query issued, every API call made, every log examined, and every decision point in an investigation.

Single-Tenant AI SOC Architecture: A deployment model where each customer's data, processing, and AI models operate in complete isolation from other customers. This ensures no cross-contamination of data and allows customers to maintain complete control over their security evidence and analysis.

Data Plane: The component of a system that handles the actual processing and storage of customer data, as distinct from the control plane that manages configuration and orchestration. In AI SOC contexts, customers in regulated industries often require the data plane to reside within their own cloud environment for sovereignty and compliance.

Model Gateway (BYO model): An org-controlled proxy in front of LLMs that enforces which models can see what data (e.g., no PHI to public models), logs every prompt/response, and allows AI SOC traffic to flow through the same AI governance stack as your product workloads.

This week's issue is sponsored by Brinqa

What If You Could See Risk Differently?

On Nov 19, Brinqa experts will show how a shift in perspective, adding context, can change everything about how you prioritize risk. Fast-paced, real, and surprisingly fun.

💡Our Insights from this Practitioner 🔍

How to Build Trust in an AI SOC for Regulated Environments (Full Episode here)

The Evolution from AI Skeptic to AI SOC Advocate

Grant Oviatt's journey from AI skeptic to building enterprise-scale AI SOC solutions mirrors the transformation happening across the security operations industry. "If I think of 15 years ago with AI, anything AI detection in it scared me to death and I was very skeptical," Grant explains. "I knew it was gonna be the highest false positive in the entire company."

What changed? Grant's perspective shifted when he began experimenting with breaking down security investigations into composable pieces that AI agents could tackle with high consistency. "When it was great, it was great," he notes. "It started becoming more of a consistency problem of how can you chunk up this problem of doing a security investigation that security operators think of into small bite-sized pieces that agents can tackle really well instead of trying to eat the elephant."

This architectural approach, decomposing complex security investigations into discrete agent tasks, has proven successful at Prophet Security, where they've achieved remarkable results: "Our average time to complete an investigation is right around four minutes" compared to traditional SOC teams that "won't even start to look at an alert in four minutes, much less complete the investigation in that time."

The consistency problem that Grant identified is critical for regulated environments. In a recent customer engagement, Prophet Security processed 12,000 investigations over two weeks and "had 99.3% agreement between their security operations team and Prophet during that 12,000 investigation stint. We were significantly faster with it. It was 11x the difference in the mean time to investigate."
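To make those figures concrete, the short calculation below works out what they imply. The implied human investigation time is an inference that assumes the four-minute average and the 11x ratio describe the same workload, which the interview does not state explicitly.

```python
investigations = 12_000
agreement_per_mille = 993   # 99.3% expressed in thousandths to keep integer math

agreed = investigations * agreement_per_mille // 1000
disagreed = investigations - agreed

per_day = investigations / 14   # two-week engagement

ai_minutes = 4    # "right around four minutes"
speedup = 11      # "11x the difference"
# Assumption: both numbers refer to the same set of investigations.
implied_human_minutes = ai_minutes * speedup

print(agreed, disagreed, round(per_day), implied_human_minutes)
# prints: 11916 84 857 44
```

In other words, roughly 84 of the 12,000 verdicts differed from the human team's, at a pace of about 857 investigations per day.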

The Twin Pillars: Explainability and Traceability

For organizations in regulated industries (healthcare, financial services, government), trust in AI SOC capabilities rests on two fundamental requirements that Grant identifies: explainability and traceability.

Explainability addresses whether the AI's decision-making process is reasonable and understandable. "Given this particular situation, is it reasonable to make the decision here," Grant explains. "In an explainable way, is the analysis understandable and reasonable for a security practitioner?" This mirrors how human analysts would be evaluated: not just on their conclusions, but on whether their reasoning makes sense given the evidence available.

Traceability focuses on the data lineage throughout an investigation. "What queries are the AI SOC issuing API requests issuing across different technologies? What queries is it issuing across your SIEM? What are the requests that it's making? What are the responses that it's returning?" Grant details. "Really tracing the inputs and the data transformation that's happening."

The difference from traditional SOC operations is striking. Where human analysts might copy-paste a few relevant log entries into their investigation notes, AI SOC platforms capture everything: "The average investigation is 40 to 50 queries across six different tools in a customer's environment. Instead of just having best effort copying and pasting of logs from a traceability perspective, you have a line by line detail of everything that was gathered, all the evidence that was gathered, and all the decision making that happened along the way."

Grant emphasizes that regulators are demanding a "10x magnification" in explainability and transparency for AI SOC compared to human-driven operations, "just given the skepticism with AI and the work product." This heightened standard actually benefits customers, even those not in regulated industries, by providing unprecedented visibility into security investigations.

Design move: Reject any AI SOC or MCP setup where you can’t see the raw queries, tool calls, and evidence chain for each decision. If you can’t audit it, you can’t defend it to a regulator.
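One way to picture the "line by line" evidence chain is an append-only trail in which each step stores the raw query, the raw response, and a link to the previous step's hash. This is a minimal sketch under stated assumptions: the `EvidenceTrail` class, its field names, and the hash-chaining scheme are illustrative and are not a description of how Prophet Security implements traceability. Responses are assumed to be JSON-serializable.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class EvidenceTrail:
    """Append-only record of every step in one investigation."""
    investigation_id: str
    entries: list = field(default_factory=list)

    def record(self, tool, query, response, reasoning):
        """Store one step and chain it to the previous entry's hash."""
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        body = {
            "step": len(self.entries) + 1,
            "tool": tool,
            "query": query,          # the raw query, exactly as issued
            "response": response,    # the raw response, exactly as returned
            "reasoning": reasoning,  # analyst-style note explaining the step
            "prev_hash": prev_hash,
        }
        body["hash"] = _digest(body)
        self.entries.append(body)
        return body["hash"]

    def verify(self):
        """Return True only if every entry's hash and its link to the
        previous entry still match the stored content."""
        prev_hash = ""
        for entry in self.entries:
            unsigned = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(unsigned):
                return False
            prev_hash = entry["hash"]
        return True


def _digest(body):
    # Canonical JSON so the same content always hashes identically.
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()
```

Editing or reordering any recorded step makes `verify()` fail. Truncating the tail of the trail is not detected by this sketch unless the latest hash is also stored somewhere the investigator cannot modify.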

Architecture for Data Sovereignty and Compliance

One of the most critical architectural decisions for AI SOC in regulated environments involves data management. Grant outlines Prophet Security's approach: "We're not a SIEM so we don't require you to stream all of your data to Prophet to make decisions. We make point in time queries across your different security tools to make decisions much like a person would."

This architecture dramatically reduces data exposure. "The subset of data that we're processing is much, much, much smaller and much more focused, which gets us out of a lot of problems from a data management perspective," Grant explains. Customers also maintain granular control: "Customers have total control of what they want to send to us and the capabilities that Prophet can issue."

For healthcare organizations dealing with HIPAA compliance, this flexibility is essential. Grant shares an example: "We have a healthcare regulated customer that said, 'Hey, my HIPAA data, I'm just not even gonna put that in the purview of Prophet to look at.' And we're gonna start without going down that path and we can explore it later."

Beyond selective data exposure, Prophet Security offers a "bring your own cloud" model where "the data plane of our environment can live defensively within the organization's perimeter. This has been really meaningful for financial organizations for us. There's defensibility that they own all of the evidence and data. They can remove our access at any time or blow away the data plane and all of that raw evidence is gone."

This architectural approach addresses a fundamental concern in regulated industries: who controls the security evidence, and can that access be revoked immediately if needed?

Single-Tenant Architecture and the Training Data Question

One of the most common concerns Grant encounters is whether customer data is being used to train AI models. His answer is unequivocal: "Our architecture's entirely single tenant. There's no cross contamination and you can think of it like onboarding a new security staff member to the team, like it's a new analyst. All the learning that happens within your tenant stays in your tenant."

Prophet Security enforces this "to a contractual level that your data is never used for training in improving the model outside of it improves the product for you, but there's no model training on the raw data." This single-tenant approach is "another huge component that's meaningful for regulated environments."

Grant also addresses the emerging trend of model portability: "Model portability is something that we've invested in as well, where regulated industries may have their own model gateway, where there's specific models that they are comfortable with and others that they're not." This allows organizations to "manage the traceability of the inputs going to the model, they're paying the outbound costs and able to manage that through their own AI governance process."
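The model gateway idea can be sketched as a thin, org-controlled wrapper that decides which data classifications each model may receive and logs every prompt and response. Everything named here is hypothetical: the model names, the classification labels, the `ModelGateway` class, and the `send` callable that stands in for whatever client your organization actually uses.

```python
from dataclasses import dataclass, field

# Hypothetical policy: which data classifications each model may receive.
DEFAULT_POLICY = {
    "internal-hosted-model": {"public", "internal", "phi"},
    "public-hosted-model": {"public", "internal"},
}


@dataclass
class ModelGateway:
    policy: dict
    send: object                       # callable(model, prompt) -> str, supplied by the org
    audit_log: list = field(default_factory=list)

    def complete(self, model, prompt, classification):
        """Forward the prompt only if policy allows this model to see
        data of the given classification; log the attempt either way."""
        allowed = classification in self.policy.get(model, set())
        entry = {"model": model, "classification": classification,
                 "prompt": prompt, "allowed": allowed, "response": None}
        self.audit_log.append(entry)   # blocked attempts are logged too
        if not allowed:
            raise PermissionError(
                f"{classification} data may not be sent to {model}")
        entry["response"] = self.send(model, prompt)
        return entry["response"]
```

Routing AI SOC traffic through the same choke point as other AI workloads is what lets the organization manage input traceability and cost through its own governance process, as Grant describes.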

Design move: Treat “no cross-tenant training on my data” as a non-negotiable contractual requirement for regulated workloads.

AI SOC vs. MDR: Replacement or Complement?

For organizations already invested in Managed Detection and Response services, Grant sees AI SOC as both a potential replacement and a complementary solution, depending on the use case.

"We have many customers that are moving from MDR to AI SOC as their holistic approach for doing investigations," Grant notes. The speed advantage is compelling: traditional MDR teams "won't even start to look at an alert in four minutes, much less complete the investigation in that time. So there's just sort of a response velocity that can't be contended with."

However, some organizations take a hybrid approach. "We have a few customers that coexist and have both," Grant explains. "AI SOCs aren't scared by custom detections or things that your team has generated. That's often 20 to 30% of the burden of a security operations team, where they have specific applications or custom detections that their security engineering team has developed that their MDR can't reasonably look at."

The scalability difference is fundamental: MDR providers serve hundreds or thousands of customers and are "focused on the base case that's consistent across all of those customers." Custom detections don't scale for human-driven MDR, but "on an AI SOC level, you know, one that's deployed for your environment specifically, it scales perfectly well."

Grant sees customers using this division of labor: "My MDR contract is going for another two years or three years, but I still have all these custom detections that are a problem for me. I would love to put AI SOC on that and then visit if this is a replacement or expand my budget spend to bring Prophet in in a broader way when that renewal is over."

The Reality of Building Your Own AI SOC

For organizations considering building AI SOC capabilities internally, Grant offers a realistic assessment: "It's a really hard problem. I think it's a fun thing to experiment with. I think there's a lot you can do on your own to build sort of enrichment and build context, but trusting decision making and that consistency with AI agents, very hard to do in an internal organization."

Grant has encountered creative attempts where teams "built a workflow that's in their store or something else where they go grab data and then they make an LLM call and then they send the data back and they make another LLM call. And it's very strict logic to try to make a single investigation work." The problem? "Now I've gotta do it for the other 500 detections in my environment. And I was like, that sounds like a chore. That doesn't sound like an answer to your problem. You're just focusing your energy in a different place."

The operational challenges extend beyond initial implementation. "Model providers change their models, and so without writing a lot of code, you might see a 3% improvement somewhere else, or a 0.5% degradation in consistency or quality of analysis," Grant explains. "We have an entire machine learning team that is looking at all the different model providers out there. You have a whole operational team that's kind of wrangling the weather, so to speak."

Grant's recommendation: "Play around with it, get an understanding of how prompts work and how models work. But when you're looking to scale this and trust this and not worry about security problems, I would work, try out some AI SOCs and see how it compares to what you build."

The Model Context Protocol Caution

For security leaders evaluating AI SOC technologies, Grant issues a specific warning about the Model Context Protocol (MCP): "MCP adds a whole other layer where there are MCP servers where you want to make it very easy to grab this information from your environment. But when you talk about regulated environments specifically, transparency is lost."

The problem is fundamental: "When I ask an MCP agent a question, it'll give me an answer, but it won't give me the query that it ran to generate the answer. And so there's a mismatch in auditability from our perspective to make this clear to you, a human, as to what happened, and that break in the chain is just one too many black boxes in the cycle."

Prophet Security made a conscious decision to "build our own collection agents for that transparency piece" rather than rely on MCP. Grant is "hopeful that MCP agents can expand to be more scrutinous on the auditability side and have some sort of REST API response that was queried on their end and tracked along with the results and send this entire package over to maintain that evidence chain of custody."

For organizations considering building their own AI SOC: "MCP is the fastest way to do it in a lot of cases, but who knows what's happening on a bunch of different levels at that point."
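The "entire package" Grant hopes for can be illustrated with a small collection wrapper that never returns an answer without the exact query and raw response behind it. This is a generic sketch using plain callables; it does not use or describe any real MCP SDK, and the function and parameter names are assumptions.

```python
import time


def collect_with_evidence(tool_name, build_query, run_query, summarize, question):
    """Run one collection step and return the answer together with
    the exact query and raw response that produced it."""
    query = build_query(question)
    queried_at = time.time()
    raw_response = run_query(query)
    return {
        "question": question,
        "tool": tool_name,
        "query": query,                # what was actually executed
        "raw_response": raw_response,  # what actually came back
        "answer": summarize(raw_response),
        "queried_at": queried_at,
    }
```

A reviewer can then re-run `query` against the same tool and compare results, which is the audit step that is lost when only the answer comes back.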

Red Lines: What AI Shouldn't Automate (Yet)

When it comes to automated remediation, Grant advocates for constrained creativity: "Agents are very creative, maybe a nicer way of saying probabilistic. If you were to give an AI agent, 'Hey, go and remediate this file on a system,' there's several different ways that it could go and do that."

His approach: "I am a big believer today in strict coupling of, instead of AI having creativity on the query part, there's a specific API that an agent is allowed to access. It performs one function, and this is the capability that the agent has in your environment."

Grant recommends treating remediation actions "more as a traditional integration in that sense, where AI SOC is managing the reasoning of when this is appropriate. But the action is a single call or a series of calls that are more deterministic."

The risk assessment is straightforward: "If agents are inspired and agentically performing remediative actions in your environment rather than coming up with the playbook of things that you should do and aligning those to REST API calls, that gets a little scary in redlining it in my opinion."

For organizations already comfortable with subprocessors or MDRs handling sensitive data during investigations, the risk profile may actually be lower with an AI SOC: "If you're working with subprocessors or MDRs that are using your PHI or PII data to do investigations, you're probably comfortable having an AI SOC do the same thing since it's the same process and honestly less risk because there's no human that's going to go and copy and paste this to some resource and share it on the internet."

Design move: Let the AI decide when to remediate, but keep how it remediates locked to a small set of deterministic, pre-approved API calls.
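One way to picture this split between reasoning and action is an allowlist of single-purpose functions. The sketch below is illustrative only: the action names, the `RemediationRequest` type, and the placeholder handlers are assumptions, and a real handler would wrap one fixed API call to the relevant tool.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class RemediationRequest:
    action: str  # action name chosen by the reasoning layer
    target: str  # e.g. a host or user identifier
    reason: str  # explanation kept for the audit trail


def isolate_host(target: str) -> str:
    # Placeholder for one fixed call to a hypothetical EDR API.
    return f"isolated:{target}"


def disable_user(target: str) -> str:
    # Placeholder for one fixed call to a hypothetical identity provider API.
    return f"disabled:{target}"


# The only capabilities the agent has; anything else is rejected.
ALLOWED_ACTIONS: Dict[str, Callable[[str], str]] = {
    "isolate_host": isolate_host,
    "disable_user": disable_user,
}


def execute(request: RemediationRequest) -> str:
    """Dispatch a request to its pre-approved handler, or refuse it."""
    handler = ALLOWED_ACTIONS.get(request.action)
    if handler is None:
        raise PermissionError(f"action not pre-approved: {request.action!r}")
    return handler(request.target)
```

The agent can propose any `RemediationRequest` it likes, but only names present in `ALLOWED_ACTIONS` ever run, and each one runs the same way every time.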

The Path to Autonomous Operations

Currently, Prophet Security closes "95 plus percent of our investigations automatically as false positive with all of that explainability and transparency. For that additional 5% of things that are malicious or that we have a question on, we think bringing in a human is still the right approach today just to have eyes on, validate the activity, confirm remediation actions and move on."
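The routing described here can be sketched as a simple rule: close automatically only when the verdict is a false positive and an explanation is attached, and send everything else to a person. The `Verdict` values, the `Investigation` type, and the rule that a missing explanation forces escalation are assumptions for illustration, not a description of the product's logic.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    FALSE_POSITIVE = "false_positive"
    MALICIOUS = "malicious"
    UNCERTAIN = "uncertain"


@dataclass(frozen=True)
class Investigation:
    alert_id: str
    verdict: Verdict
    explanation: str  # evidence summary backing the verdict


def route(investigation: Investigation) -> str:
    """Return "auto_close" or "escalate_to_human" for a finished investigation."""
    has_explanation = bool(investigation.explanation.strip())
    if investigation.verdict is Verdict.FALSE_POSITIVE and has_explanation:
        return "auto_close"
    # Malicious, uncertain, or unexplained results get human eyes.
    return "escalate_to_human"
```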

But Grant sees this evolving: "I think that's more of where the market is and less of where the product is. I think we're gonna move more and more to a state where only if you have an issue, a threat has been identified and it's been remediated in three minutes. This has all happened. You get a rollup report of what's been observed and your team isn't escalated to because the threat was mitigated. There's no further action to do."

The technology is ready, but operations teams may not be: "I actually don't think the operations teams are ready to be there, and that's okay. I think trust becomes the important element." This mirrors the broader theme of Grant's insights: successful AI SOC adoption, in regulated or non-regulated environments, ultimately comes down to building trust through transparency, explainability, and demonstrated consistency.

The Future: From Investigation to Security Program Management

Looking forward, Grant sees AI SOC evolving beyond individual alert triage: "Where I see AI SOC continuing to move is taking the groundwork out of SOC operations, the grunt work level tasks out of people's purview. Instead, operate more as a manager in the loop where I'm managing my detection program."

This includes capabilities like threat hunting: "How can I ask bigger hypothesis driven questions and have AI systems go and pull larger sets of data for me and start to find unidentified threats in my environment?"

It also encompasses detection management and posture: "How can I look over alerts that I've seen in the past, identify my gaps in line with like MITRE ATT&CK framework or similar and suggest tuning recommendations?"
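As a rough illustration of that gap analysis, the sketch below compares the ATT&CK technique IDs attached to past alerts against a set of techniques an organization has decided are in scope. The function names and the sample data are hypothetical; in practice, the in-scope set would come from the organization's own threat model.

```python
from collections import Counter
from typing import Iterable, List, Set, Tuple


def coverage_gaps(alert_techniques: Iterable[str],
                  techniques_in_scope: Set[str]) -> List[str]:
    """In-scope technique IDs that no past alert has mapped to."""
    return sorted(techniques_in_scope - set(alert_techniques))


def noisiest_techniques(alert_techniques: Iterable[str],
                        top_n: int = 3) -> List[Tuple[str, int]]:
    """Technique IDs with the most alerts, as candidates for tuning review."""
    return Counter(alert_techniques).most_common(top_n)


# Illustrative data: technique IDs tagged on historical alerts.
past_alerts = ["T1059", "T1059", "T1078", "T1059", "T1078"]
in_scope = {"T1059", "T1078", "T1566", "T1486"}

gaps = coverage_gaps(past_alerts, in_scope)        # techniques never alerted on
noisy = noisiest_techniques(past_alerts, top_n=1)  # most frequent technique
```

With this sample data, `gaps` contains the two in-scope techniques that never produced an alert, and `noisy` points at the technique generating the most volume.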

The vision is for security professionals to shift from conducting individual investigations to orchestrating an AI-driven security program: "Have that orchestration happen by an army of agents and you're getting the feedback to make decisions on whether this is helpful or expending energy that's unneeded in your organization."

Grant's perspective: "I continue to think that AI SOC is going to shift to build security programs that I honestly dreamed of in past places."

MITRE ATT&CK Framework - Knowledge base of adversary tactics and techniques for detection planning

NIST AI Risk Management Framework - Framework for managing AI system risks in regulated environments

EU AI Act Compliance Resources - European Union AI regulation guidance

FedRAMP Authorization for Cloud Services - Federal authorization program for cloud service offerings

Question for you? (Reply to this email)

🤖 What would it take for you to let an AI SOC auto-close alerts?

Choose one, both, or something else:

A time threshold (e.g., “sub-5-minute investigations”)

The minimum audit trail you’d need to sign off

Next week, we'll explore another critical aspect of cloud security. Stay tuned!

📬 Want weekly expert takes on AI & Cloud Security? [Subscribe here]

We would love to hear from you 📢 if you have a feature or topic request, or if you would like to sponsor an edition of Cloud Security Newsletter.

Thank you for continuing to subscribe, and welcome to the new members of this newsletter community 💙

Peace!

Was this forwarded to you? You can sign up here to join our growing readership.

Want to sponsor the next newsletter edition? Let's make it happen.

Have you joined our FREE Monthly Cloud Security Bootcamp yet?

Check out our sister podcast, AI Security Podcast.