- Cloud Security Newsletter

- Posts

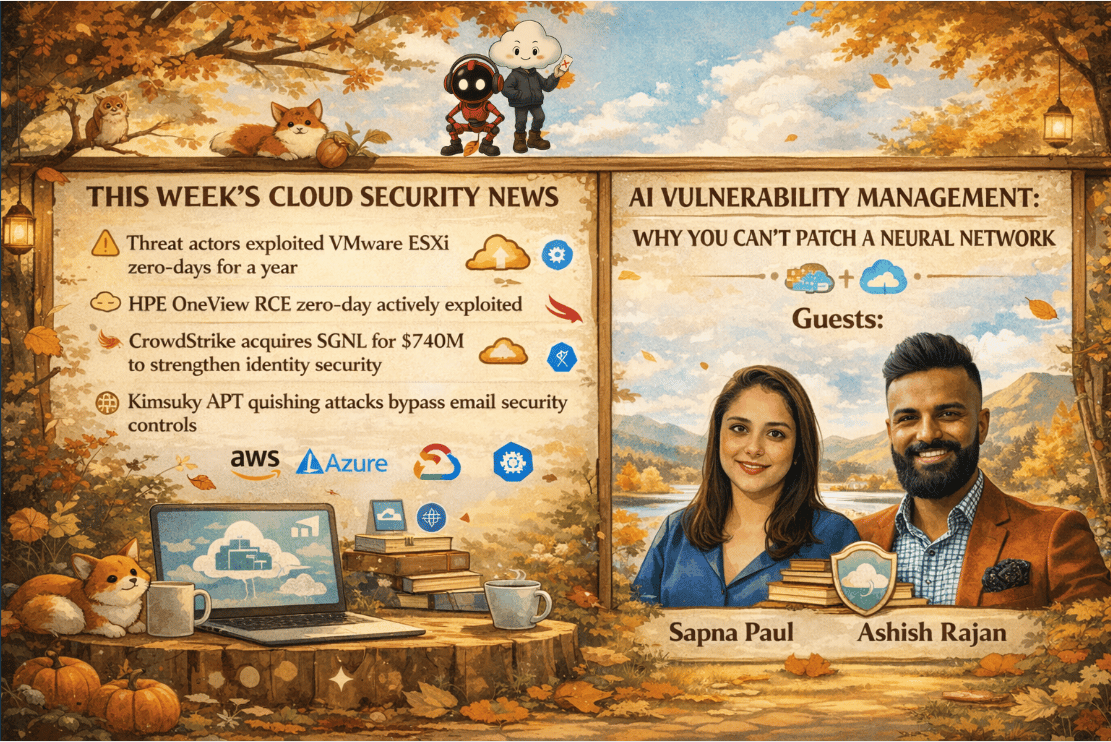

- VMware ESXi Zero-Days Exploited for Year: Lessons from Dayforce's AI-FirstVulnerability Management Strategy

VMware ESXi Zero-Days Exploited for Year: Lessons from Dayforce's AI-FirstVulnerability Management Strategy

This week's newsletter covers critical enterprise vulnerabilities including year-long VMware ESXi exploitation by Chinese threat actors, HPE OneView's maximum-severity RCE flaw, and CrowdStrike's $740M identity security acquisition. Plus, Dayforce's Sapna Paul shares how AI is transforming vulnerability management from scan and patch workflows to continuous observation, detection, and model retraining.

Hello from the Cloud-verse!

This week’s Cloud Security Newsletter topic: AI Vulnerability Management: Why You Can't Patch a Neural Network (continue reading)

Incase, this is your 1st Cloud Security Newsletter! You are in good company!

You are reading this issue along with your friends and colleagues from companies like Netflix, Citi, JP Morgan, Linkedin, Reddit, Github, Gitlab, CapitalOne, Robinhood, HSBC, British Airways, Airbnb, Block, Booking Inc & more who subscribe to this newsletter, who like you want to learn what’s new with Cloud Security each week from their industry peers like many others who listen to Cloud Security Podcast & AI Security Podcast every week.

Welcome to this week’s Cloud Security Newsletter

As we enter the first full week of 2026, a troubling pattern is emerging: sophisticated threat actors are exploiting critical infrastructure vulnerabilities months, even years before public disclosure, while enterprises struggle to secure increasingly dynamic, AI-driven assets.

This week, Sapna Paul, Senior Manager of Vulnerability Management at Dayforce, joins us to explain why traditional scan-and-patch workflows are breaking down — and how AI is forcing a fundamental rethink of what “vulnerability management” actually means.[Listen to the episode]

📰 TL;DR for Busy Readers

Chinese threat actors exploited VMware ESXi zero-days for 12+ months before disclosure, targeting 30,000+ exposed instances

HPE OneView vulnerability (CVSS 10.0) actively exploited, CISA sets January 28 remediation deadline for infrastructure management platforms

CrowdStrike acquires SGNL for $740M, signaling major consolidation in identity security and zero-trust markets

AI vulnerability management requires new approach: observe, detect anomalies, retrain models—not just scan and patch

FBI warns North Korean Kimsuky APT using QR code phishing to bypass email security and harvest credentials via mobile devices

📰 THIS WEEK'S TOP 5 SECURITY HEADLINES

1. Chinese Hackers Exploit VMware ESXi Zero-Days a Year Before Disclosure

Security researchers at Huntress uncovered a VM escape toolkit (“MAESTRO”) used by Chinese threat actors to exploit three VMware ESXi zero-days:

CVE-2025-22224

CVE-2025-22225

CVE-2025-22226

Active since: Feb 2024

Disclosed: March 2025

Exposed instances: 30,000+

Why This Matters

Why Security Leaders & Teams Should Care

Hypervisors remain silent, high-impact targets

Signature-based scanning failed for a full year

VM escape = total trust boundary collapse

Action

Patch ESXi immediately

Enable hypervisor-level monitoring

Reduce ESXi internet exposure

Segment management planes

Virtualization platforms remain high-value APT targets.

2. HPE OneView Zero-Day Exploited in the Wild (CVE-2025-37164)

A maximum-severity vulnerability in HPE OneView was added to CISA’s KEV list.

Remediation deadline: January 28, 2026

Why This Matters

Infrastructure control planes are becoming single points of enterprise failure.

Action

Patch immediately

Restrict OneView network access

Monitor admin activity and command execution

Infrastructure management platforms are becoming single points of failure.

Sources: CISA KEV Catalog, ITPro

3. CrowdStrike Acquires SGNL for $740M

CrowdStrike announced its acquisition of SGNL, expanding into AI-powered continuous authorization.

Why This Matters

Traditional IAM struggles with:

Static access policies

Multi-cloud fragmentation

Limited entitlement visibility

This acquisition reinforces trends toward:

Continuous authorization

Zero-trust architectures

Real-time access decisions

Sources: CNBC, CrowdStrike Announcement

4. FBI Warns: Kimsuky Using QR-Code Phishing (“Quishing”)

The FBI warns that North Korean APT Kimsuky is embedding malicious QR codes in emails to harvest credentials via mobile devices.

Why This Matters

Key risks include:

Mobile devices bypassing enterprise security

QR codes evading email inspection

Session token theft bypassing MFA

Recommended Actions

Deploy Mobile Threat Defense (MTD)

Treat mobile as lower-trust endpoints

Educate users on QR phishing

Monitor mobile authentication anomalies

Sources: FBI Flash Alert, Tech Radar

5. Insider Threat: Cybersecurity Professionals in BlackCat Ransomware

Two U.S. cybersecurity professionals pleaded guilty to acting as BlackCat/ALPHV ransomware affiliates, earning $1.27M.

Why This Matters

Key Takeaway:

Insider threats now include highly skilled defenders

Zero-trust must apply to privileged users

Vendor security assurance needs deeper scrutiny

Sources: U.S. Department of Justice, SecurityWeek

AWS Previews Security Agent

AWS announced the preview of Security Agent, an AI-powered penetration testing and security review platform that provides context-aware application security testing from design through deployment. The tool represents AWS's investment in AI-driven security automation, addressing the growing gap between application release velocity and security testing cadence.

Why This Matters

Traditional application security testing struggles to keep pace with modern CI/CD pipelines where organizations deploy code hundreds of times per day. AWS Security Agent attempts to solve this by:

Context-aware security testing

CI/CD-integrated AppSec

Developer-focused remediation

Organizations should validate AI findings and maintain human oversight.

Sources: AWS Security Blog

🎯 Cloud Security Topic of the Week:

AI Assets and the Evolution of Vulnerability Management

Traditional vulnerability management has operated on a simple premise: find the flaw, patch it, verify the fix. But what happens when the asset itself is a learning system that can develop flaws organically over time? As AI models become core enterprise assets powering everything from fraud detection to customer service security teams face a fundamental challenge: you can't patch a neural network the way you patch Windows Server.

This week, we explore how vulnerability management is evolving to address AI assets, based on insights from Sapna Paul's work at Dayforce. The implications extend beyond AI-specific risks to fundamentally reshape how we think about asset management, risk quantification, and security operations in dynamic environments.

Featured Experts This Week 🎤

Sapna Paul – Senior Manager, Vulnerability Management, Dayforce

Ashish Rajan - CISO | Co-Host AI Security Podcast , Host of Cloud Security Podcast

Definitions and Core Concepts 📚

Before diving into our insights, let's clarify some key terms:

AI Model Vulnerability: A flaw or weakness in an artificial intelligence model that can be exploited to manipulate outcomes, exfiltrate training data, or cause the model to behave incorrectly. Unlike traditional software vulnerabilities, AI model vulnerabilities often stem from traning data poisoning, adversarial inputs, or emergent behaviors that weren't present during development.

Neural Network: The underlying architecture of most modern AI systems, consisting of layers of interconnected nodes (neurons) that process information and learn patterns from data. In vulnerability management contexts, the neural network itself becomes the asset requiring protection, rather than just the application hosting it.

Model Retraining: The process of updating an AI model by feeding it new or corrected training data to modify its behavior. This is the AI equivalent of patching, but unlike applying a security patch, retraining can take weeks of compute time and requires extensive validation to ensure the model maintains accuracy while addressing the vulnerability.

Data Poisoning: An attack technique where adversaries inject malicious data into an AI model's training dataset, causing it to learn incorrect patterns or behaviors. This is particularly insidious because the vulnerability becomes embedded in the model's fundamental understanding, not just in its code.

Behavioral Monitoring (AI Context): Continuous observation of AI model outputs and decision-making patterns to detect anomalies that might indicate vulnerability exploitation, data drift, or emergent problematic behaviors. This replaces traditional point-in-time vulnerability scanning for AI assets.

NIST AI Risk Management Framework (AI RMF): A voluntary framework providing guidance for managing risks associated with artificial intelligence systems. It covers governance, trust, and responsible AI principles, serving as a de facto standard for U.S. organizations building or deploying AI systems.

This week's issue is sponsored by Push Security

Want to learn how to respond to modern attacks that don’t touch the endpoint?

Modern attacks have evolved — most breaches today don’t start with malware or vulnerability exploitation. Instead, attackers are targeting business applications directly over the internet.

This means that the way security teams need to detect and respond has changed too.

Register for the latest webinar from Push Security on February 11 for an interactive, “choose-your-own-adventure” experience walking through modern IR scenarios, where your inputs will determine the course of our investigations.

💡Our Insights from this Practitioner 🔍

AI Vulnerability Management: Why You Can't Patch a Neural Network (Full Episode here)

The Three Layers of AI Vulnerability Management

Sapna Paul fundamentally reframes vulnerability management for AI assets around three critical layers that security teams must address simultaneously:

Layer 1: Production Model Security

The first layer focuses on how adversaries can impact models actively serving users. "Have to keep analyzing the production models that are running, that are solving a use case," Sapna explains. "How an adversarial can impact the working of that model through red teaming and security testing of these models is so important."

This isn't traditional penetration testing it requires specialized techniques like:

Adversarial example generation to test model robustness

Prompt injection testing for language models

Boundary testing to identify model hallucination thresholds

Performance degradation analysis under attack conditions

For enterprise security teams, this means dedicating resources to ongoing model security assessment, not just pre-deployment validation. As Sapna notes, vulnerabilities in AI systems "can make AI become rogue" if left unaddressed.

Layer 2: Data Security and Integrity

The second layer addresses the training pipeline, the most critical attack surface for AI systems. "The vulnerabilities that can make their way into the model is through data, the training that is happening on the left side," Sapna emphasizes. "Poisoning, the biasness that an AI can learn is in the left side. So it's so important to understand what data is going in and the controls that need to be put around that data layer."

This requires security teams to:

Implement access controls on training datasets with full audit logging

Validate data integrity and provenance before training

Monitor for bias indicators in training data

Test models for signs of data poisoning (unusual decision boundaries, performance degradation on specific inputs)

The complexity here is that data poisoning attacks can be subtle. An attacker doesn't need to corrupt the entire dataset; carefully crafted examples injected into training data can cause specific failure modes that only activate under certain conditions.

Layer 3: Behavioral Ethics and Compliance

The third layer addresses what Sapna calls the "technically correct but ethically wrong" problem. "A model is giving you an outcome which is technically correct but ethically wrong if compliance is not there, AI can become rogue."

This layer requires entirely new evaluation frameworks:

Ethical review of model outputs across diverse scenarios

Demographic fairness testing to identify discriminatory patterns

Regulatory compliance validation (EU AI Act, state-level AI regulations)

Alignment testing to ensure model behavior matches organizational values

Consider a fraud detection model that accurately identifies fraud but disproportionately flags transactions from specific demographic groups. The model is technically working it's catching fraud but its operation violates fairness principles and potentially regulatory requirements.

"You have to make business understand what risk AI is bringing to your business," Sapna notes. "If you cannot tie back to the business or whatever your company's goals are, it's just not a useful risk register."

Shifting from Patching to Retraining: A Fundamental Workflow Change

Traditional vulnerability management follows a predictable cycle: discover vulnerability → apply patch → verify fix → move to next vulnerability. This workflow assumes the asset is static between updates.

AI assets break this model completely. "What would you do if an AI model has learned something wrong? There's no patch," Sapna explains. "There's no Patch Tuesdays. You can't just go there and patch the vulnerabilities. You have to take a step back, detect what it's doing, what weaknesses and flaws the model has, and then retrain."

The retraining process introduces complexity that traditional patch management never encountered:

Compute Resource Requirements: Model retraining can consume weeks of GPU time and cost tens of thousands of dollars for large models. This makes the "just patch everything" approach financially impractical.

Validation Cycles: After retraining, teams must validate that:

The vulnerability has been addressed

The model maintains its original accuracy and performance

No new failure modes have been introduced

Regulatory compliance is maintained

Deployment Risk: Deploying a retrained model carries risk that doesn't exist with traditional patches. A misconfigured model update could cause widespread service degradation or incorrect business decisions.

"It takes so many cycles of compute and weeks of testing and revalidation and redeployment," Sapna notes. "If I just put it in one line: you don't scan and patch anymore. You observe, you detect anomalies, and you retrain the model."

Asset Management Meets AI: When Assets Learn and Evolve

Perhaps the most fundamental shift Sapna describes is reconceptualizing what constitutes an "asset" in vulnerability management. "The asset is a neural network. The asset is a model, right? It's an AI, artificial intelligence. We have not done or assessed that sort of asset before in a typical traditional vulnerability management or cybersecurity space."

This creates several challenges for traditional asset management systems:

Dynamic Asset Behavior: Traditional assets have predictable behavior. A web server processes HTTP requests the same way today as it did yesterday. AI models evolve their behavior based on input patterns and can develop emergent properties that weren't present during testing.

Parameter Space Complexity: "There are billions of parameters, billions of different things you have to think about when you are doing vulnerability management in this space," Sapna explains. Traditional configuration management might track hundreds or thousands of settings; AI models have billions of weights and connections that collectively determine behavior.

Lack of Determinism: Unlike traditional software where the same input reliably produces the same output, AI models incorporate randomness and can produce different results for identical inputs. This makes vulnerability reproduction and testing significantly more complex.

For security teams, this means rethinking asset inventory practices:

Document not just the model, but its training data lineage, hyperparameters, and validation metrics

Track model versions with the same rigor as critical infrastructure

Maintain test datasets that can verify model behavior over time

Establish baselines for "normal" model behavior to detect drift or compromise

Speaking the Language of Data Teams: Bridging the Security-AI Gap

One of Sapna's most valuable insights addresses the communication gap between security and AI development teams. "You have to talk in the language that data people understand. If you go and say your model has this security vulnerability, they'll be like, 'What? No.' But if you go and say your model is going to be 30% less effective because an adversarial can do something to it and change its scores, now you can sit with that person and make them understand."

This reframing is critical because:

Different Risk Perspectives: Security teams think in terms of vulnerabilities and exploits. Data science teams think in terms of model accuracy, precision, and recall. The same issue must be translated across these mental models.

Business Impact Translation: Instead of technical security jargon, frame AI vulnerabilities in terms of:

Model performance degradation under attack

Business revenue impact from compromised models

Regulatory penalties from biased or non-compliant model behavior

Reputational damage from AI failures

Upskilling Requirements: "For that, we need to upskill as well. Security compliance people need to upskill their AI concepts, knowledge, subject matter... so you can talk in their language," Sapna emphasizes.

Organizations should invest in:

AI fundamentals training for security teams

Security awareness training for data science teams

Cross-functional working groups that bring both disciplines together

Shared metrics that matter to both security and AI teams

Governance Over Perfection: The Pragmatic Path Forward

Sapna offers a refreshingly pragmatic perspective on AI security: "You can't really have a perfect system. An ML model cannot just explain how it is reaching that particular outcome. So you won't have perfect explainability, but what you can have is good governance of AI systems."

This governance-first approach recognizes that:

Explainability Has Limits: Many of the most powerful AI models (deep neural networks, ensemble methods) are inherently difficult to fully explain. Demanding complete explainability would prevent deployment of valuable AI systems.

Governance Provides Guardrails: Instead of trying to make AI perfect, focus on:

Version control for models (like Git for code)

Audit trails of data access and model training

Security gates in ML pipelines

Continuous monitoring of production models

Incident response procedures for AI failures

"If governed properly and audited properly, these can become big enablers for people who are trying to get AI models out the door," Sapna notes.

Using AI to Manage AI Vulnerabilities: The Meta-Solution

Perhaps most intriguingly, Sapna describes how AI itself can help manage the vulnerability burden that AI creates. "Use AI in your workflow if you have not done that. Start thinking and start planning for it... You can use AI to lessen and lessen the burden of vulnerabilities on our stakeholders."

Practical applications include:

Intelligent Prioritization: AI can analyze vulnerability data alongside threat intelligence, asset criticality, and exploitability to surface the most critical risks. "It needs a lot of planning. You can't just use public models and start giving vulnerability data to it... In a more contained environment, how can you take the model, feed the data it needs to, and then see what outcomes you can get from the model to help prioritize even farther than you are prioritizing today."

Operational Efficiency: "Your analyst hours can be saved if you just give them the magic of AI," Sapna explains. Instead of spending time on coordination and manual data collection, analysts can focus on vulnerability research and quality assessment. "All those traditional workflows can just die. The data would be on the plate for you to investigate and quality assess."

Persona-Based Assistants: Sapna describes implementing AI bots tailored to specific user roles: "Think about the personas that you deal with in your day-to-day life. Put yourself in those shoes. Write a prompt that if I am a system engineer and I need to patch through these servers in this weekend cycle, what should I focus on?"

The key is providing these AI assistants with appropriate context:

Current vulnerability data and compensating controls

Asset criticality and business impact

Patch schedules and maintenance windows

Historical patching success rates

The Build vs. Buy Decision for AI Security Tools

Organizations face the critical decision of whether to build custom AI security capabilities or purchase commercial tools. Sapna's guidance emphasizes starting with available resources:

Cloud Provider Platforms: "Every company is using a cloud provider... Every cloud provider has an AI platform. You have access to their own platform. You can just go to your cloud service provider platform, start using AWS Bedrock, Azure OpenAI Foundry... start writing prompts of what would help me in my job."

Vendor Tool AI Features: "Plug AI into your existing workflow systems like ServiceNow or Jira they have their own AIs as well. Each tool has their own AI, and these companies are doing a great job in giving that functionality to users."

Gradual Adoption: "Start with one video and just make a note that you have to see one video every week on AI," Sapna advises. "Start with a basic prompt first. This will improve over time. Once you put it in their hands, your users, you will be amazed to see how they can build such great prompts."

The message: don't let perfect be the enemy of good. Start experimenting with AI tools using low-stakes use cases, learn from the results, and gradually expand to more critical applications.

Compliance and Innovation: Complementary, Not Contradictory

Sapna challenges the common perception that compliance and security slow innovation, particularly with AI. "Compliance and innovation go hand in hand. They complement each other. Compliance allows you to put guardrails in your AI. If compliance is not there, if risk and assessment is not there, AI can become rogue."

The key is framing security as an enabler:

Guardrails Enable Speed: By establishing clear security boundaries, organizations can move faster with AI deployments because they've pre-approved certain architectures and controls. Teams don't need to reinvent security for each new AI project.

Shift-Left Principle: "You have to shift left. You can't think compliance or security is an afterthought. From the moment you have started your pipeline, from the moment the data comes into your organization, it has to be within certain guardrails."

Practical implementation:

Access controls on training data with full audit trails

Version control from day one of model development

Integrity testing and bias detection in data pipelines

Security gates at each stage of the ML pipeline

"That mindset needs to be there to see them as enablers, not blockers," Sapna emphasizes. "And I think that is also relevant to other innovations, not just AI."

Future Skills: What Vulnerability Management Teams Need

Looking ahead, Sapna outlines the evolving skill requirements for vulnerability management professionals: "You just have to have …to learn AI…”

Cloud Security Podcast

Question for you? (Reply to this email)

🤖 Should AI agents be treated as “identities” with least privilege and audit trails or as tools your org implicitly trusts?

Next week, we'll explore another critical aspect of cloud security. Stay tuned!

📬 Want weekly expert takes on AI & Cloud Security? [Subscribe here]”

We would love to hear from you📢 for a feature or topic request or if you would like to sponsor an edition of Cloud Security Newsletter.

Thank you for continuing to subscribe and Welcome to the new members in tis newsletter community💙

Peace!

Was this forwarded to you? You can Sign up here, to join our growing readership.

Want to sponsor the next newsletter edition! Lets make it happen

Have you joined our FREE Monthly Cloud Security Bootcamp yet?

checkout our sister podcast AI Security Podcast